
This is a Prometheus exporter for NVIDIA GPU metrics. It uses the Go bindings for the NVIDIA Management Library (NVML), which is a C-based API that can be used for monitoring NVIDIA GPU devices. Unlike some other similar exporters, it does not call the nvidia-smi binary.

GPU stats monitoring (https://github.com/swiftdiaries/nvidia_gpu_prometheus_exporter) is an important feature for training and serving. This document describes the design and implementation of the components needed.

Monitoring is a crucial component that adds visibility into the infrastructure and its (near) real-time performance. GPU stats in conjunction with TensorBoard give strong insight into the training process and, separately, into serving.

Prometheus is widely used for monitoring k8s clusters.

A Prometheus exporter is needed so that Prometheus can pull GPU stats.

A dashboard is needed to visualize the Prometheus data.

The repository includes nvml.h, so there are no special requirements from the build environment. go get should be able to build the exporter binary.

go get github.com/mindprince/nvidia_gpu_prometheus_exporter

kubectl create -f https://raw.githubusercontent.com/swiftdiaries/nvidia_gpu_prometheus_exporter/master/nvidia-exporter.yaml

Note: Ensure nvidia-docker is installed.

$ sudo docker run --runtime=nvidia --rm nvidia/cuda nvidia-smi

Reference: NVIDIA/nvidia-docker on GitHub (build and run Docker containers leveraging NVIDIA GPUs)

$ kubectl create -f https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/v1.11/nvidia-device-plugin.yml

Note: It takes a couple of minutes for the drivers to install.

$ kubectl apply --filename https://raw.githubusercontent.com/giantswarm/kubernetes-prometheus/master/manifests-all.yaml

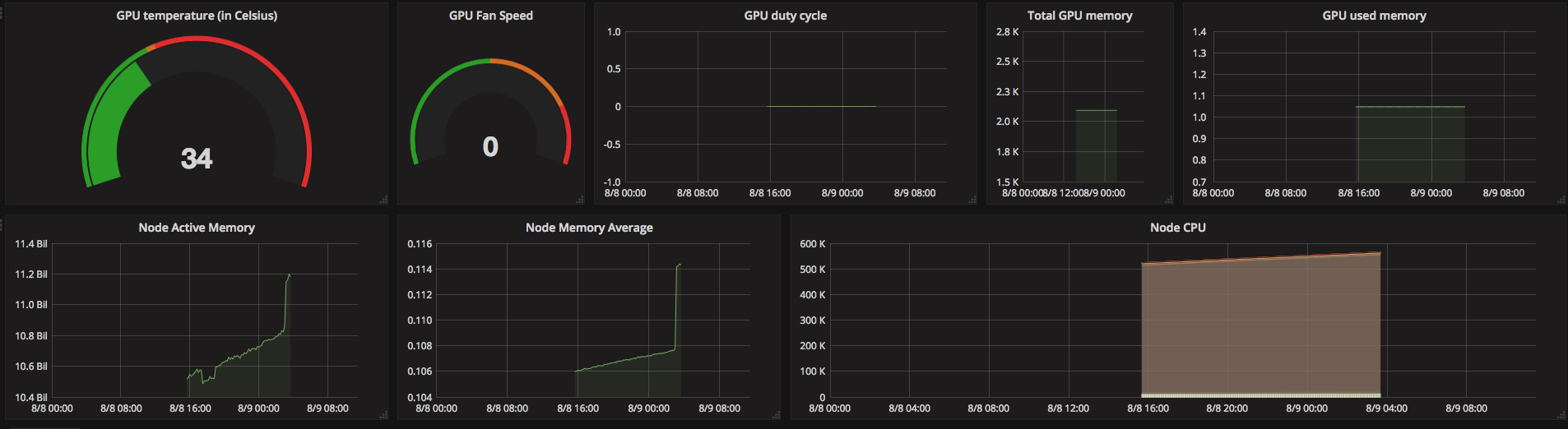

wget https://raw.githubusercontent.com/swiftdiaries/nvidia_gpu_prometheus_exporter/master/Prometheus-GPU-stats-1533769198014.json

Import this JSON to Grafana.

Note: Excuse the flat duty cycle.

- Reduce size of image used for exporter.

- Simpler / manageable YAML for Prometheus.

- ksonnet app for easy deployments / integration with Kubeflow.

Note: priority is not necessarily in that order.

$ make build

$ docker run -p 9445:9445 --rm --runtime=nvidia swiftdiaries/gpu_prom_metrics

Make changes, build, iterate.

Verify:

$ curl -s localhost:9445/metrics | grep -i "gpu"

Sample output:

# HELP nvidia_gpu_duty_cycle Percent of time over the past sample period during which one or more kernels were executing on the GPU device

# TYPE nvidia_gpu_duty_cycle gauge

nvidia_gpu_duty_cycle{minor_number="0",name="GeForce GTX 950",uuid="GPU-6e7a0fa1-0770-c210-1a5c-8710bc09ce00"} 0

# HELP nvidia_gpu_fanspeed_percent Fanspeed of the GPU device as a percent of its maximum

# TYPE nvidia_gpu_fanspeed_percent gauge

nvidia_gpu_fanspeed_percent{minor_number="0",name="GeForce GTX 950",uuid="GPU-6e7a0fa1-0770-c210-1a5c-8710bc09ce00"} 0

# HELP nvidia_gpu_memory_total_bytes Total memory of the GPU device in bytes

# TYPE nvidia_gpu_memory_total_bytes gauge

nvidia_gpu_memory_total_bytes{minor_number="0",name="GeForce GTX 950",uuid="GPU-6e7a0fa1-0770-c210-1a5c-8710bc09ce00"} 2.092171264e+09

# HELP nvidia_gpu_memory_used_bytes Memory used by the GPU device in bytes

# TYPE nvidia_gpu_memory_used_bytes gauge

nvidia_gpu_memory_used_bytes{minor_number="0",name="GeForce GTX 950",uuid="GPU-6e7a0fa1-0770-c210-1a5c-8710bc09ce00"} 1.048576e+06

# HELP nvidia_gpu_num_devices Number of GPU devices

# TYPE nvidia_gpu_num_devices gauge

nvidia_gpu_num_devices 1

# HELP nvidia_gpu_power_usage_milliwatts Power usage of the GPU device in milliwatts

# TYPE nvidia_gpu_power_usage_milliwatts gauge

nvidia_gpu_power_usage_milliwatts{minor_number="0",name="GeForce GTX 950",uuid="GPU-6e7a0fa1-0770-c210-1a5c-8710bc09ce00"} 13240

# HELP nvidia_gpu_temperature_celsius Temperature of the GPU device in celsius

# TYPE nvidia_gpu_temperature_celsius gauge

nvidia_gpu_temperature_celsius{minor_number="0",name="GeForce GTX 950",uuid="GPU-6e7a0fa1-0770-c210-1a5c-8710bc09ce00"} 34
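To consume output like the sample above outside of Prometheus, for example in a quick health check, the text format can be parsed with the standard library alone. The following is a minimal sketch, not part of the exporter: the helper name parseSamples is hypothetical, and it assumes sample lines carry no trailing timestamp, which holds for the sample output shown here.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// parseSamples (hypothetical helper) reads Prometheus text exposition output
// and returns each sample value keyed by its full series string, that is the
// metric name plus its label set.
// Assumption: lines have no trailing timestamp, so the value is the last
// space-separated field. Label values may contain spaces.
func parseSamples(text string) (map[string]float64, error) {
	samples := make(map[string]float64)
	sc := bufio.NewScanner(strings.NewReader(text))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		// Skip blank lines and "# HELP" / "# TYPE" comment lines.
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		i := strings.LastIndex(line, " ")
		if i < 0 {
			return nil, fmt.Errorf("malformed sample line: %q", line)
		}
		v, err := strconv.ParseFloat(line[i+1:], 64)
		if err != nil {
			return nil, fmt.Errorf("bad value in %q: %w", line, err)
		}
		samples[line[:i]] = v
	}
	return samples, sc.Err()
}

func main() {
	// Lines taken from the sample output above.
	text := `# TYPE nvidia_gpu_temperature_celsius gauge
nvidia_gpu_temperature_celsius{minor_number="0",name="GeForce GTX 950",uuid="GPU-6e7a0fa1-0770-c210-1a5c-8710bc09ce00"} 34
nvidia_gpu_num_devices 1`

	samples, err := parseSamples(text)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	for series, value := range samples {
		fmt.Printf("%s = %g\n", series, value)
	}
}
```

For anything beyond a quick check, a maintained parser for the exposition format is likely a better choice than this hand-rolled one.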

The exporter requires the following:

- access to the NVML library (libnvidia-ml.so.1).

- access to the GPU devices.

To make sure that the exporter can access the NVML libraries, either add them to the search path for shared libraries or set LD_LIBRARY_PATH to point to their location.

By default the metrics are exposed on port 9445. This can be changed using the -web.listen-address flag.
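For readers unfamiliar with how such a flag is usually wired up in Go, here is a minimal sketch using the standard flag package. It is an illustration, not the exporter's actual source: the helper parseListenAddr is hypothetical, and the default value ":9445" (all interfaces, port 9445) is an assumption based on the default port stated above.

```go
package main

import (
	"flag"
	"fmt"
)

// parseListenAddr (hypothetical helper) parses command-line arguments and
// returns the listen address. The ":9445" default is an assumption derived
// from the documented default port.
func parseListenAddr(args []string) (string, error) {
	fs := flag.NewFlagSet("exporter", flag.ContinueOnError)
	addr := fs.String("web.listen-address", ":9445", "address to expose metrics on")
	if err := fs.Parse(args); err != nil {
		return "", err
	}
	return *addr, nil
}

func main() {
	// Simulates running the binary with -web.listen-address=:9500.
	addr, err := parseListenAddr([]string{"-web.listen-address=:9500"})
	if err != nil {
		fmt.Println("flag error:", err)
		return
	}
	fmt.Println("metrics would be served on", addr)
}
```

In a real server the returned address would be passed to something like http.ListenAndServe; that part is left out so the sketch runs and exits immediately.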