This repository is part of the supplementary material for the paper "GateNet: Efficient Deep Neural Network for Gate Perception in Autonomous Drone Racing".

The content is limited because the paper is under review.

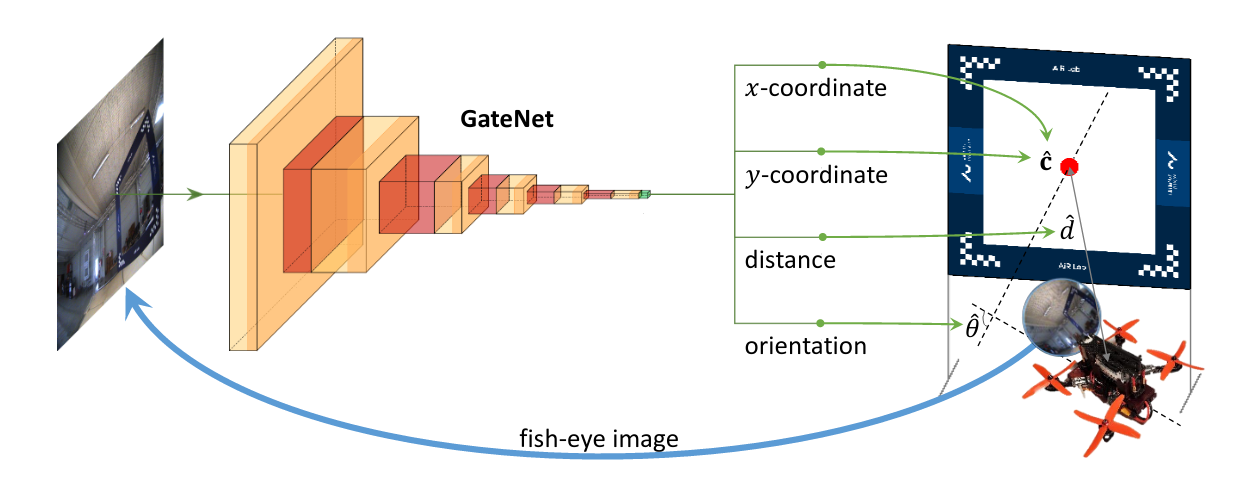

The figure illustrates the working principle of our gate perception system. Scene images are captured by a single wide-FOV fish-eye RGB camera and fed into GateNet, which estimates the gate center location as well as the gate's distance and orientation with respect to the drone's body frame. These estimates are then used to re-project the gate into the 3D world frame, where an extended Kalman filter is applied to obtain a stable gate pose estimate.
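As a rough illustration of the re-projection and filtering step, the sketch below back-projects a predicted gate center and distance into 3D and smooths the result over time. It is not the code used in the paper. Everything in it is an assumption made for illustration: the equidistant fish-eye model (`r = f * theta`), the intrinsics `f`, `cx`, `cy`, the camera pose `R_wc`, `t_wc`, and all function and class names. It also treats the camera frame as the body frame, ignores gate orientation, and replaces the extended Kalman filter with a simple per-axis linear Kalman filter for a stationary gate.

```python
import math


def backproject_equidistant(u, v, distance, f, cx, cy):
    """Gate center in the camera frame (x right, y down, z forward).

    Assumes an ideal equidistant fish-eye model, r = f * theta,
    where theta is the angle between the ray and the optical axis.
    """
    dx, dy = u - cx, v - cy
    r = math.hypot(dx, dy)          # radial pixel distance from the principal point
    theta = r / f                   # angle from the optical axis
    phi = math.atan2(dy, dx)        # direction around the optical axis
    ray = (
        math.sin(theta) * math.cos(phi),
        math.sin(theta) * math.sin(phi),
        math.cos(theta),
    )                               # unit-length viewing ray
    return tuple(distance * c for c in ray)


def to_world(p_cam, R_wc, t_wc):
    """Apply p_world = R_wc * p_cam + t_wc with a 3x3 nested-list rotation."""
    return tuple(
        sum(R_wc[i][j] * p_cam[j] for j in range(3)) + t_wc[i]
        for i in range(3)
    )


class StaticGateFilter:
    """Per-axis linear Kalman filter for a gate that does not move.

    A simplified stand-in for the extended Kalman filter mentioned above.
    """

    def __init__(self, p0, var0=1.0, process_var=1e-4, meas_var=0.05):
        self.x = list(p0)           # estimated gate position in the world frame
        self.P = [var0] * 3         # per-axis estimate variance
        self.q = process_var        # process noise variance
        self.r = meas_var           # measurement noise variance

    def update(self, z):
        for i in range(3):
            p_pred = self.P[i] + self.q         # predict (static state)
            k = p_pred / (p_pred + self.r)      # Kalman gain
            self.x[i] += k * (z[i] - self.x[i]) # correct with the measurement
            self.P[i] = (1.0 - k) * p_pred
        return tuple(self.x)


if __name__ == "__main__":
    # Hypothetical values: a prediction at the image center, 2 m away.
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    p_cam = backproject_equidistant(320.0, 240.0, 2.0, f=200.0, cx=320.0, cy=240.0)
    p_world = to_world(p_cam, identity, (1.0, 2.0, 3.0))
    gate = StaticGateFilter(p_world)
    print(gate.update(p_world))
```

A real pipeline would use the calibrated fish-eye model of the actual camera and the drone's estimated pose in place of the placeholder intrinsics and identity rotation used here.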

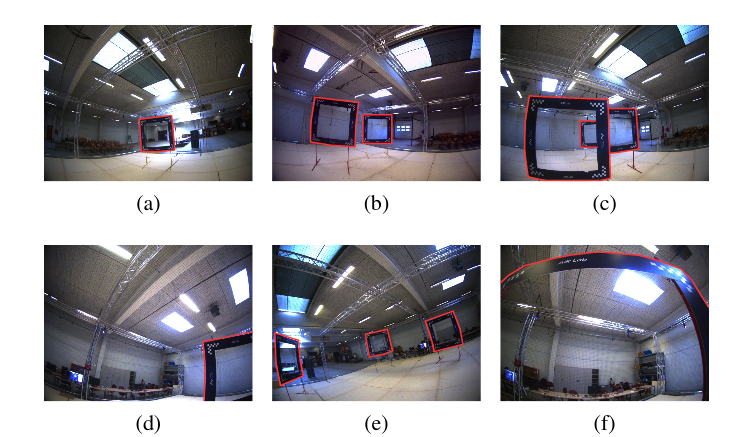

The AU-DR dataset includes the different gate layout cases that appear in a drone racing scenario: (a) a single gate, (b) multiple gates, (c) occluded gates, (d) partially observable gates, (e) gates with a distant layout, and (f) a gate that is too close to the drone’s camera.

You can download the AU-DR dataset here.

H. Pham, I. Bozcan, A. Sarabakha, S. Haddadin, and E. Kayacan, "GateNet: Efficient Deep Neural Network for Gate Perception in Autonomous Drone Racing," submitted to the IEEE International Conference on Robotics and Automation (ICRA) 2021.