This code is a PyTorch implementation for paper: CycleGAN-VC3: Examining and Improving CycleGAN-VCs for Mel-spectrogram Conversion, a nice work on Voice-Conversion/Voice Cloning.

- Dataset

- VC

- Usage

- Training

- Example

- Demo

- Reference

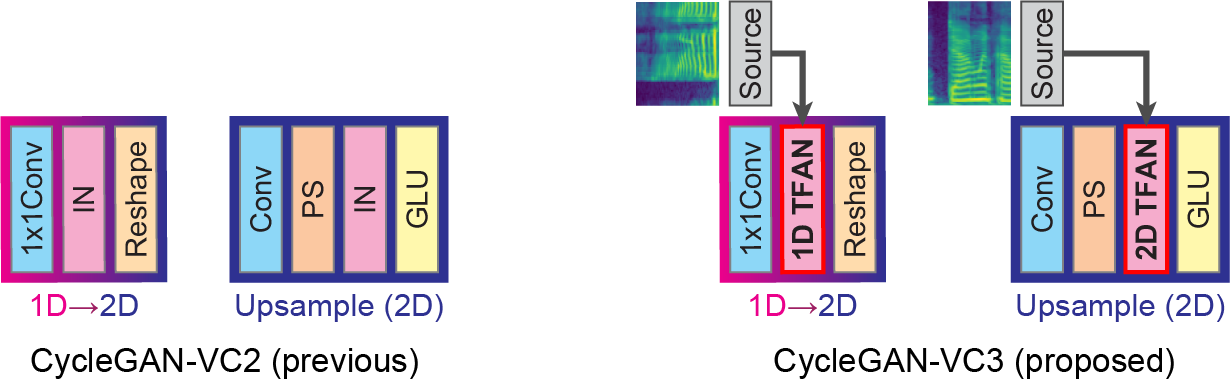

Non-parallel voice conversion (VC) is a technique for learning mappings between source and target speeches without using a parallel corpus. Recently, CycleGAN-VC [3] and CycleGAN-VC2 [2] have shown promising results regarding this problem and have been widely used as benchmark methods. However, owing to the ambiguity of the effectiveness of CycleGAN-VC/VC2 for mel-spectrogram conversion, they are typically used for mel-cepstrum conversion even when comparative methods employ mel-spectrogram as a conversion target. To address this, we examined the applicability of CycleGAN-VC/VC2 to mel-spectrogram conversion. Through initial experiments, we discovered that their direct applications compromised the time-frequency structure that should be preserved during conversion. To remedy this, we propose CycleGAN-VC3, an improvement of CycleGAN-VC2 that incorporates time-frequency adaptive normalization (TFAN). Using TFAN, we can adjust the scale and bias of the converted features while reflecting the time-frequency structure of the source mel-spectrogram. We evaluated CycleGAN-VC3 on inter-gender and intra-gender non-parallel VC. A subjective evaluation of naturalness and similarity showed that for every VC pair, CycleGAN-VC3 outperforms or is competitive with the two types of CycleGAN-VC2, one of which was applied to mel-cepstrum and the other to mel-spectrogram.

This repository contains:

- TFAN module code which implemented the TFAN module

- model code which implemented the model network.

- audio preprocessing script you can use to create cache for training data.

- training scripts to train the model.

pip install -r requirements.txt- CycleGAN-VC3: Examining and Improving CycleGAN-VCs for Mel-spectrogram Conversion. Paper, Project

- CycleGAN-VC2: Improved CycleGAN-based Non-parallel Voice Conversion. Paper, Project

- Parallel-Data-Free Voice Conversion Using Cycle-Consistent Adversarial Networks. Paper, Project

- Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. Paper, Project, Code

- Image-to-Image Translation with Conditional Adversarial Nets. Paper, Project, Code

If this project help you reduce time to develop, you can give me a cup of coffee :)

AliPay(支付宝)

WechatPay(微信)

MIT © Kun