Code for my paper "Semi-Supervised Unconstrained Head Pose Estimation in the Wild". The paper is under review. Please see the demos, manuscript, and models on the Project page, arXiv, and HuggingFace, respectively.

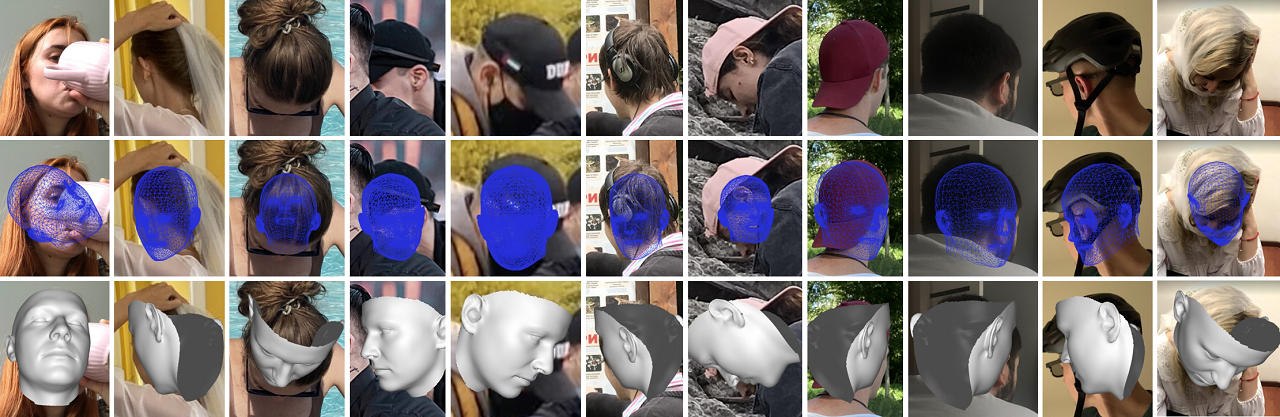

The unconstrained head pose estimation results of our SemiUHPE on challenging wild heads (e.g., heavy blur, extreme illumination, severe occlusion, atypical pose, and invisible face). Qualitative results of our method (3rd row) and DAD-3DNet (2nd row) on heads from the DAD-3DHeads test set (1st row), which never appeared during SSL training.


Existing research on unconstrained in-the-wild head pose estimation suffers from the flaws of its datasets, which consist either of numerous samples obtained by non-realistic synthesis or constrained collection, or of small-scale natural images yet with plausible manual annotations. To alleviate this, we propose SemiUHPE, the first semi-supervised unconstrained head pose estimation method, which can leverage abundant, easily available unlabeled head images. Technically, we choose semi-supervised rotation regression and adapt it to the error-sensitive and label-scarce problem of unconstrained head pose. Our method is based on the observation that aspect-ratio-invariant cropping of wild heads is superior to the previous landmark-based affine alignment, given that landmarks of unconstrained human heads are usually unavailable, especially for less-explored non-frontal heads. Instead of using an empirically fixed threshold to filter out pseudo-labeled heads, we propose dynamic entropy-based filtering, which adaptively removes unlabeled outliers as training progresses by updating the threshold in multiple stages. We then revisit the design of weak-strong augmentations and improve it by devising two novel head-oriented strong augmentations, termed pose-irrelevant cut-occlusion and pose-altering rotation consistency, respectively. Extensive experiments and ablation studies show that SemiUHPE outperforms existing methods by a large margin on public benchmarks under both the front-range and full-range settings.
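
As a rough illustration of aspect-ratio-invariant cropping, the sketch below expands a detected head box into a square around its center, rather than warping the head with a landmark-based affine transform. The function name `square_crop_box` and the `scale` context margin are hypothetical; the repository's actual cropping and padding code may differ.

```python
def square_crop_box(x1, y1, x2, y2, scale=1.2):
    """Expand a head box (x1, y1, x2, y2) into a square box with the same center.

    Both sides use the longer edge of the input box, so resizing the crop to a
    square network input does not distort the head's aspect ratio. The context
    margin `scale` is an assumed value, not one taken from this repository.
    """
    cx, cy = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    half = max(x2 - x1, y2 - y1) * scale / 2.0
    # The square may extend past the image border; a real pipeline would pad
    # the image (e.g. with zeros) before cropping. That step is omitted here.
    return (cx - half, cy - half, cx + half, cy + half)


# A tall, narrow head box (60 x 100 pixels) becomes a 120 x 120 square.
print(square_crop_box(100, 50, 160, 150))
```

This only needs a head bounding box, which a head detector can provide even for back-facing heads where facial landmarks do not exist.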

| Results of Euler angle errors on AFLW2000 | Results of HPE on the challenging DAD-3DHeads |
|---|---|
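
Euler-angle errors on AFLW2000 are conventionally reported as the mean absolute error (MAE) of yaw, pitch and roll, plus their average. The sketch below computes that from rotation matrices. The Z-Y-X decomposition R = Rz(roll) · Ry(yaw) · Rx(pitch) and all helper names are assumptions for illustration; `eval.py` may use a different axis order or sign convention.

```python
import math


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matrix_from_euler(yaw, pitch, roll):
    """Build R = Rz(roll) @ Ry(yaw) @ Rx(pitch) from angles in degrees (assumed convention)."""
    y, p, r = (math.radians(v) for v in (yaw, pitch, roll))
    return matmul(rot_z(r), matmul(rot_y(y), rot_x(p)))


def euler_from_matrix(m):
    """Invert matrix_from_euler; valid for |yaw| < 90 degrees (front range)."""
    yaw = math.atan2(-m[2][0], math.hypot(m[0][0], m[1][0]))
    pitch = math.atan2(m[2][1], m[2][2])
    roll = math.atan2(m[1][0], m[0][0])
    return tuple(math.degrees(v) for v in (yaw, pitch, roll))


def euler_mae(pred_mats, gt_mats):
    """Per-angle MAE (yaw, pitch, roll) and their average, in degrees.

    No angle wrap-around handling, which is acceptable in the front range.
    """
    errs = [0.0, 0.0, 0.0]
    for p, g in zip(pred_mats, gt_mats):
        for i, (a, b) in enumerate(zip(euler_from_matrix(p), euler_from_matrix(g))):
            errs[i] += abs(a - b)
    mae = [e / len(gt_mats) for e in errs]
    return mae, sum(mae) / 3.0


gt = [matrix_from_euler(30, -10, 5), matrix_from_euler(-45, 20, 0)]
pred = [matrix_from_euler(33, -12, 4), matrix_from_euler(-40, 20, 2)]
print(euler_mae(pred, gt))
```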

- PyTorch=1.13.1 + torchvision=0.14.1 + cudatoolkit=11.8

- PyTorch3D==0.7.2 (installation via `conda` or `pypi` may be problematic; build from source if necessary)

- Remember to `cd Sim3DR` and install the 3D face mesh renderer for head pose visualization.

- Other dependencies:

      pip install opencv-python tqdm matplotlib scipy tensorboard configargparse lmdb pyyaml

- For training datasets, please follow our HuggingFace or hf-mirror.com link to download the labeled data (e.g., 300W-LP & AFLW2000 and DAD-3DHeads) and unlabeled data (e.g., WildHead, COCOHead, CrowdHuman and OpenImageV6).

- For pretrained backbones, please download the RepVGG weights from `links.txt`. The other backbones used, including ResNet50, EfficientNetV2-S, EfficientNet-B4 and TinyViT-21M, can be downloaded automatically.

- Training Example 1 - Fully Supervised Training (Supervised): 20% labeled 300WLP + remaining 300WLP as unlabeled data (not used) + testing on AFLW2000

      $ python train.py --config settings/300WLP_AFLW2000.yml --test_set AFLW2000 --network effinetv2 --num_workers 4 --stage1_iteration 60000 --max_iteration 60000 --ss_ratio 0.2 --gpu_ids 0 --batch_size 32 --val_frequency 500
      $ python eval.py SSL1.0_r0.2_ce_effinetv2_t-5.3_b32_ema/Julxx_xxxxxx/best \
          --config settings/300WLP_AFLW2000.yml --network effinetv2 --batch_size 32 --gpu_ids 0
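
The `--ss_ratio` flag appears to set the labeled fraction of 300WLP (0.2 for 20%). A minimal sketch of such a split, assuming a seeded random permutation of sample indices; `split_labeled_unlabeled` is a hypothetical helper, not a function from this repository.

```python
import random


def split_labeled_unlabeled(num_samples, ss_ratio, seed=0):
    """Split sample indices into a labeled subset and the remaining unlabeled pool.

    Assumption: labeled samples are drawn uniformly at random with a fixed seed
    so that runs are reproducible. In the fully supervised setting the unlabeled
    pool is simply ignored.
    """
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)
    num_labeled = int(round(num_samples * ss_ratio))
    return sorted(indices[:num_labeled]), sorted(indices[num_labeled:])


labeled, unlabeled = split_labeled_unlabeled(1000, 0.2)
print(len(labeled), len(unlabeled))  # 200 800
```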

- Training Example 2 - Semi-Supervised Training (SemiUHPE): 20% labeled 300WLP + remaining 300WLP as unlabeled data (used) + testing on AFLW2000

      $ python train.py --config settings/300WLP_AFLW2000.yml --network effinetv2 --num_workers 4 --stage1_iteration 60000 \
          --max_iteration 100000 --ss_ratio 0.20 --gpu_ids 0 --batch_size 32 --val_frequency 500 --ulb_batch_ratio 4 \
          --dynamic_thres --cutout_aug --cutmix_aug --SSL_lambda 1.0 --left_ratio 0.95
      $ python eval.py SSL1.0_r0.2_ce_effinetv2_tDyna0.95_b32_ema_CO_CM/Julxx_xxxxxx/best \
          --config settings/300WLP_AFLW2000.yml --network effinetv2 --batch_size 32 --gpu_ids 0
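
`--dynamic_thres` together with `--left_ratio` suggests that the entropy threshold for pseudo-labeled heads is re-estimated during training instead of being fixed. One plausible reading, sketched below purely as an assumption, sets the threshold at the `left_ratio` quantile of the current pseudo-label entropies, so that roughly that fraction of unlabeled heads is kept and the rest are dropped as outliers. The helper names and the quantile rule are illustrative and may not match the repository's multi-stage schedule.

```python
import math


def entropy_threshold(entropies, left_ratio):
    """Threshold that keeps roughly the lowest-entropy `left_ratio` fraction of samples.

    Assumption: lower entropy means a more confident pseudo label. Uses a
    nearest-rank quantile so the threshold is always an observed value.
    """
    ordered = sorted(entropies)
    rank = max(1, math.ceil(left_ratio * len(ordered)))  # 1-based nearest rank
    return ordered[rank - 1]


def keep_mask(entropies, threshold):
    """True for pseudo-labeled heads whose entropy does not exceed the threshold."""
    return [e <= threshold for e in entropies]


# Hypothetical entropies of the same 10 unlabeled heads at two training stages;
# the model grows more confident, so the re-estimated threshold tightens.
stage_entropies = [
    [-4.0, -5.0, -3.5, -6.0, -2.0, -5.5, -4.5, -3.0, -6.5, -1.0],
    [-5.0, -6.0, -5.5, -6.5, -4.0, -6.2, -5.8, -4.8, -7.0, -3.5],
]
for stage, ents in enumerate(stage_entropies, start=1):
    thres = entropy_threshold(ents, left_ratio=0.75)
    kept = sum(keep_mask(ents, thres))
    print(f"stage {stage}: threshold {thres:.2f}, kept {kept}/{len(ents)}")
```

By contrast, the baseline in Example 3 presumably applies one fixed `--conf_thres` (e.g. -5.4) for the whole run.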

- Training Example 3 - Semi-Supervised Training (Baseline): 10% labeled 300WLP + remaining 300WLP as unlabeled data (used) + testing on AFLW2000

      $ python train.py --config settings/300WLP_AFLW2000.yml --network effinetv2 --num_workers 4 --stage1_iteration 40000 \
          --max_iteration 80000 --ss_ratio 0.10 --gpu_ids 1 --batch_size 32 --val_frequency 500 --ulb_batch_ratio 4 --conf_thres -5.4
      $ python eval.py SSL1.0_r0.1_ce_effinetv2_t-5.4_b32_ema/Julxx_xxxxxx/best \
          --config settings/300WLP_AFLW2000.yml --network effinetv2 --batch_size 32 --gpu_ids 0
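
Judging from the flag names, `--stage1_iteration` appears to end a supervised-only warm-up, after which semi-supervised training runs until `--max_iteration`, and `--ulb_batch_ratio` appears to scale the unlabeled batch relative to `--batch_size`. Under those assumptions (not confirmed by the repository), the training budget of Examples 2 and 3 works out as follows; `ssl_schedule` is a hypothetical helper.

```python
# Assumed semantics of the training flags (not confirmed by the repository):
#   steps up to stage1_iteration          -> supervised warm-up only,
#   steps stage1_iteration..max_iteration -> semi-supervised training,
#   unlabeled batch size = batch_size * ulb_batch_ratio.
def ssl_schedule(stage1_iteration, max_iteration, batch_size, ulb_batch_ratio):
    """Return (SSL iterations, unlabeled heads per step, unlabeled heads seen in the SSL stage)."""
    ssl_iters = max_iteration - stage1_iteration
    ulb_batch = batch_size * ulb_batch_ratio
    return ssl_iters, ulb_batch, ssl_iters * ulb_batch


examples = {
    "Example 2 (SemiUHPE)": dict(stage1_iteration=60000, max_iteration=100000, batch_size=32, ulb_batch_ratio=4),
    "Example 3 (baseline)": dict(stage1_iteration=40000, max_iteration=80000, batch_size=32, ulb_batch_ratio=4),
}
for name, cfg in examples.items():
    iters, per_step, total = ssl_schedule(**cfg)
    print(f"{name}: {iters} SSL iterations x {per_step} unlabeled heads/step = {total} samples")
```

Under this reading, Example 1 sets `--stage1_iteration` equal to `--max_iteration`, which leaves zero semi-supervised iterations and matches its fully supervised setting.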

- Training Example 4 - Semi-Supervised Training (SemiUHPE): all labeled 300WLP + unlabeled COCOHead + testing on AFLW2000

      $ python train.py --config settings/300WLP_COCOHead.yml --network effinetv2 --num_workers 8 --stage1_iteration 90000 \
          --max_iteration 120000 --gpu_ids 1 --batch_size 32 --val_frequency 500 --ulb_batch_ratio 8 --dynamic_thres \
          --cutout_aug --cutmix_aug --SSL_lambda 1.0 --left_ratio 0.75
      $ python eval.py SSL1.0_r0.05_ce_effinetv2_tDyna0.75_b32_ema_CO_CM/Julxx_xxxxxx/best \
          --config settings/300WLP_COCOHead.yml --network effinetv2 --batch_size 32 --gpu_ids 0
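
`--cutout_aug` presumably enables the pose-irrelevant cut-occlusion strong augmentation: an occluder pasted onto the head does not change its pose, so the pseudo label predicted on the weak view can supervise the occluded strong view unchanged. The Cutout-style sketch below works on a nested-list grayscale image; the function name, size range, and fill value are assumptions, and `--cutmix_aug` would presumably paste a patch from another image instead of a constant fill (not shown).

```python
import random


def cut_occlusion(image, max_frac=0.5, fill=0, rng=None):
    """Cutout-style occlusion: return a copy of `image` with one random rectangle filled.

    `image` is a list of pixel rows (grayscale). Rectangle sides are drawn up to
    `max_frac` of the image size; both values are illustrative assumptions.
    Returns the occluded copy and the rectangle as (top, left, height, width).
    """
    rng = rng or random.Random()
    h, w = len(image), len(image[0])
    ch = rng.randint(1, max(1, int(h * max_frac)))
    cw = rng.randint(1, max(1, int(w * max_frac)))
    top = rng.randint(0, h - ch)
    left = rng.randint(0, w - cw)
    out = [row[:] for row in image]  # leave the weak view untouched
    for y in range(top, top + ch):
        for x in range(left, left + cw):
            out[y][x] = fill
    return out, (top, left, ch, cw)


weak_view = [[255] * 8 for _ in range(8)]
strong_view, box = cut_occlusion(weak_view, rng=random.Random(0))
# The occluder does not move the head, so the strong view is supervised with
# the pseudo label predicted on `weak_view`, unchanged.
print(box)
```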

- Training Example 5 - Semi-Supervised Training (SemiUHPE): labeled DAD3DHeads train-set + unlabeled COCOHead + testing on DAD3DHeads val-set

      $ python train.py --config settings/DAD3DHeads_COCOHead.yml --network effinetv2 --num_workers 4 --stage1_iteration 100000 \
          --max_iteration 200000 --gpu_ids 1 --batch_size 32 --val_frequency 500 --ulb_batch_ratio 4 --is_full_range \
          --dynamic_thres --cutout_aug --cutmix_aug --rotate_aug --SSL_lambda 1.0 --left_ratio 0.75
      $ python eval.py SSL1.0_r0.05_ce_effinetv2_tDyna0.75_b32_ema_RO_CO_CM_full/Julxx_xxxxxx/best --is_full_range \
          --config settings/DAD3DHeads_COCOHead.yml --network effinetv2 --batch_size 32 --gpu_ids 0
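
`--rotate_aug` presumably enables the pose-altering rotation consistency augmentation. Rotating the image in-plane by an angle θ corresponds to rotating the head about the camera's optical (z) axis, so, unlike cut-occlusion, the label must change: a pseudo label R from the unrotated view becomes Rz(θ) · R for the rotated view. A minimal sketch, assuming rotations expressed in the camera frame; the pairing between the sign of θ and the image rotation direction is an assumed convention, and the helper names are hypothetical.

```python
import math


def rot_z(theta):
    """Rotation by `theta` radians about the camera's optical (z) axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rotated_pseudo_label(r_weak, theta):
    """Target for a view rotated in-plane by `theta`: R' = Rz(theta) @ R_weak.

    The sign of `theta` must match how the image itself is rotated; that
    pairing is an assumed convention here.
    """
    return matmul(rot_z(theta), r_weak)


def max_abs_diff(a, b):
    return max(abs(a[i][j] - b[i][j]) for i in range(3) for j in range(3))


# A head with a pure 10-degree roll, rotated in-plane by 30 degrees,
# should be labeled as a 40-degree roll.
r_weak = rot_z(math.radians(10))
r_strong = rotated_pseudo_label(r_weak, math.radians(30))
print(max_abs_diff(r_strong, rot_z(math.radians(40))) < 1e-12)
```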

- Evaluation Example 1 (on DAD3DHeads test-set): SemiUHPE + effinetv2-s (WildHead)

      $ python eval_DAD3DHeads.py SSL1.0_r0.05_ce_effinetv2_tDyna0.75_b64_ema_RO_CO_CM_full/Julxx_xxxxxx/best \
          --is_full_range --config settings/DAD3DHeads_WildHead.yml --network effinetv2 --gpu_ids 1

- Evaluation Example 2 (on DAD3DHeads test-set): SemiUHPE + resnet50 (COCOHead)

      $ python eval_DAD3DHeads.py SSL1.0_r0.05_ce_tDyna0.75_b16_ema_RO_CO_CM_full/Sep20_195132/best \
          --is_full_range --config settings/DAD3DHeads_COCOHead.yml --network resnet50 --gpu_ids 1

Then, the obtained JSON files should be emailed to the DAD-3DHeads benchmark organizers, as described in dad_3dheads_benchmark.
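
In the full-range setting (`--is_full_range`), yaw can go beyond ±90°, where Euler-angle differences become misleading: yaw 170° and yaw -170° differ by 340° numerically but only 20° physically. A convention-free way to compare two rotations is the geodesic angle of their relative rotation, sketched below. This is a generic metric, not necessarily the one computed by `eval_DAD3DHeads.py` or the DAD-3DHeads benchmark, and the helper names are hypothetical.

```python
import math


def geodesic_deg(r1, r2):
    """Angle (degrees) of the relative rotation R1^T R2 between two 3x3 rotations.

    Uses trace(R1^T R2) = 1 + 2 cos(angle); the clamp guards against values
    slightly outside [-1, 1] caused by floating-point error.
    """
    trace = sum(r1[k][i] * r2[k][i] for i in range(3) for k in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def rot_y(deg):
    """Rotation about the vertical (y) axis, i.e. a pure yaw under an assumed convention."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


# Yaw 170 vs. yaw -170: the naive Euler difference is 340 degrees,
# but the two head orientations are only 20 degrees apart.
print(round(geodesic_deg(rot_y(170), rot_y(-170)), 6))
```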

- Inference Example 1 (on images): SemiUHPE + resnet50 (COCOHead)

      $ python image.py DAD-COCOHead-ResNet50-best --is_full_range --config settings/DAD3DHeads_COCOHead.yml \
          --network resnet50 --gpu_ids 1

- Inference Example 2 (on videos): SemiUHPE + effinetv2-s (WildHead)

      $ python video.py DAD-WildHead-EffNetV2-S-best --is_full_range --config settings/DAD3DHeads_WildHead.yml \
          --network effinetv2 --gpu_ids 1

Note that the corresponding pretrained model weights `DAD-COCOHead-ResNet50-best.pth` and `DAD-WildHead-EffNetV2-S-best.pth` for unconstrained head pose estimation are released on HuggingFace or hf-mirror.com. The head detection model weight `ch_head_l_1536_e150_best_mMR.pt` used in the `image.py` and `video.py` scripts can be downloaded from our previous work BPJDetPlus for body-head joint detection of multiple persons. Many more impressive demos of images and videos can be found on our project homepage.

Our codebase is partly based on Probabilistic matrix-Fisher, FisherMatch and DAD-3DHeads. The head detection model is based on our previous work BPJDet for multi-person body-head joint detection. The 3D face mesh renderer is based on 3DDFA_V2 - Sim3DR. We also thank the public in-the-wild head-related datasets COCOHead, HumanParts, CrowdHuman, BFJDet and OpenImageV6 for their excellent dataset construction and laborious label annotation.

If you use our code or models in your research, please cite:

    @article{zhou2024semi,
      title={Semi-Supervised Unconstrained Head Pose Estimation in the Wild},
      author={Zhou, Huayi and Jiang, Fei and Jin, Yuan and Rui, Yong and Lu, Hongtao and Jia, Kui},
      journal={arXiv preprint arXiv:2404.02544},
      year={2024}
    }