Releases: JosefAlbers/Phi-3-Vision-MLX

Phi-3.5-MLX

Phi-3-MLX is a versatile AI framework that leverages both the Phi-3-Vision multimodal model and the Phi-3-Mini-128K language model, optimized for Apple Silicon using the MLX framework. This project provides an easy-to-use interface for a wide range of AI tasks, from advanced text generation to visual question answering and code execution.

Features

- Integration with Phi-3-Vision (multimodal) model

- Support for the Phi-3-Mini-128K (language-only) model

- Optimized performance on Apple Silicon using MLX

- Batched generation for processing multiple prompts

- Flexible agent system for various AI tasks

- Custom toolchains for specialized workflows

- Model quantization for improved efficiency

- LoRA fine-tuning capabilities

- API integration for extended functionality (e.g., image generation, text-to-speech)

Minimum Requirements

Phi-3-MLX is designed to run on Apple Silicon Macs. The minimum requirements are:

- Apple Silicon Mac (M1, M2, or later)

- 8GB RAM (with quantization, using the quantize_model=True option)

For optimal performance, especially when working with larger models or datasets, we recommend using a Mac with 16GB RAM or more.
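A rough calculation shows why quantization matters on an 8GB machine: weight memory is about parameter count × bits per weight ÷ 8 bytes. The parameter counts below (about 3.8B for Phi-3-Mini and 4.2B for Phi-3-Vision) are approximate published figures, and treating quantize_model=True as 4-bit is an assumption. Activations, the KV cache and quantization metadata are ignored.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumptions: approximate parameter counts; 4-bit stands in for "quantized";
# activations, KV cache and per-group scales/offsets are ignored.

def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

for name, params in [("Phi-3-Mini", 3.8e9), ("Phi-3-Vision", 4.2e9)]:
    fp16 = weight_memory_gb(params, 16)
    q4 = weight_memory_gb(params, 4)
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

At 16-bit the weights alone come to roughly 7.6 to 8.4 GB, which leaves no headroom on an 8GB Mac; at 4 bits they shrink to about 2 GB.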

Quick Start

Install and launch Phi-3-MLX from the command line:

pip install phi-3-vision-mlx

phi3v

To use the library in a Python script instead:

from phi_3_vision_mlx import generate

1. Core Functionalities

Visual Question Answering

generate('What is shown in this image?', 'https://collectionapi.metmuseum.org/api/collection/v1/iiif/344291/725918/main-image')

Model and Cache Quantization

# Model quantization

generate("Describe the water cycle.", quantize_model=True)

# Cache quantization

generate("Explain quantum computing.", quantize_cache=True)

Batch Text Generation

# A list of prompts for batch generation

prompts = [

"Write a haiku about spring.",

"Explain the theory of relativity.",

"Describe a futuristic city."

]

# Generate responses using Phi-3-Vision (multimodal model)

generate(prompts, max_tokens=100)

# Generate responses using Phi-3-Mini-128K (language-only model)

generate(prompts, max_tokens=100, blind_model=True)

Constrained Beam Decoding

from phi_3_vision_mlx import constrain

# Use constrain for structured generation (e.g., code, function calls, multiple-choice)

prompts = [

"A 20-year-old woman presents with menorrhagia for the past several years. She says that her menses “have always been heavy”, and she has experienced easy bruising for as long as she can remember. Family history is significant for her mother, who had similar problems with bruising easily. The patient's vital signs include: heart rate 98/min, respiratory rate 14/min, temperature 36.1°C (96.9°F), and blood pressure 110/87 mm Hg. Physical examination is unremarkable. Laboratory tests show the following: platelet count 200,000/mm3, PT 12 seconds, and PTT 43 seconds. Which of the following is the most likely cause of this patient’s symptoms? A: Factor V Leiden B: Hemophilia A C: Lupus anticoagulant D: Protein C deficiency E: Von Willebrand disease",

"A 25-year-old primigravida presents to her physician for a routine prenatal visit. She is at 34 weeks gestation, as confirmed by an ultrasound examination. She has no complaints, but notes that the new shoes she bought 2 weeks ago do not fit anymore. The course of her pregnancy has been uneventful and she has been compliant with the recommended prenatal care. Her medical history is unremarkable. She has a 15-pound weight gain since the last visit 3 weeks ago. Her vital signs are as follows: blood pressure, 148/90 mm Hg; heart rate, 88/min; respiratory rate, 16/min; and temperature, 36.6℃ (97.9℉). The blood pressure on repeat assessment 4 hours later is 151/90 mm Hg. The fetal heart rate is 151/min. The physical examination is significant for 2+ pitting edema of the lower extremity. Which of the following tests o should confirm the probable condition of this patient? A: Bilirubin assessment B: Coagulation studies C: Hematocrit assessment D: Leukocyte count with differential E: 24-hour urine protein"

]

# Define constraints for the generated text

constraints = [(0, '\nThe'), (100, ' The correct answer is'), (1, 'X.')]

# Apply constrained beam decoding

results = constrain(prompts, constraints, blind_model=True, quantize_model=True, use_beam=True)

Choosing From Options

from phi_3_vision_mlx import choose

# Select best option from choices for given prompts

prompts = [

"What is the largest planet in our solar system? A: Earth B: Mars C: Jupiter D: Saturn",

"Which element has the chemical symbol 'O'? A: Osmium B: Oxygen C: Gold D: Silver"

]

# For multiple-choice or decision-making tasks

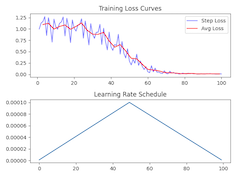

choose(prompts, choices='ABCDE')

LoRA Fine-tuning

from phi_3_vision_mlx import train_lora, test_lora

# Train a LoRA adapter

train_lora(

lora_layers=5, # Number of layers to apply LoRA

lora_rank=16, # Rank of the LoRA adaptation

epochs=10, # Number of training epochs

lr=1e-4, # Learning rate

warmup=0.5, # Fraction of steps for learning rate warmup

dataset_path="JosefAlbers/akemiH_MedQA_Reason"

)

# Generate text using the trained LoRA adapter

generate("Describe the potential applications of CRISPR gene editing in medicine.",

blind_model=True,

quantize_model=True,

use_adapter=True)

# Test the performance of the trained LoRA adapter

test_lora()

2. Agent Interactions

Multi-turn Conversation

from phi_3_vision_mlx import Agent

# Create an instance of the Agent

agent = Agent()

# First interaction: Analyze an image

agent('Analyze this image and describe the architectural style:', 'https://images.metmuseum.org/CRDImages/rl/original/DP-19531-075.jpg')

# Second interaction: Follow-up question

agent('What historical period does this architecture likely belong to?')

# End conversation, clear memory for new interaction

agent.end()

Generative Feedback Loop

# Ask the agent to generate and execute code to create a plot

agent('Plot a Lissajous Curve.')

# Ask the agent to modify the generated code and create a new plot

agent('Modify the code to plot 3:4 frequency')

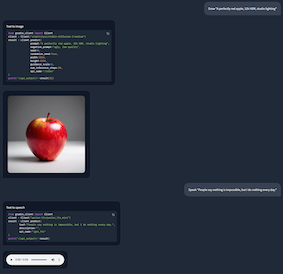

agent.end()

External API Tool Use

# Request the agent to generate an image

agent('Draw "A perfectly red apple, 32k HDR, studio lighting"')

agent.end()

# Request the agent to convert text to speech

agent('Speak "People say nothing is impossible, but I do nothing every day."')

agent.end()

3. Custom Toolchains

In-Context Learning Agent

from phi_3_vision_mlx import add_text

# Define the toolchain as a string

toolchain = """

prompt = add_text(prompt)

responses = generate(prompt, images)

"""

# Create an Agent instance with the custom toolchain

agent = Agent(toolchain, early_stop=100)

# Run the agent

agent('How to inspect API endpoints? @https://raw.githubusercontent.com/gradio-app/gradio/main/guides/08_gradio-clients-and-lite/01_getting-started-with-the-python-client.md')

Retrieval Augmented Coding Agent
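In this example the retrieval step is handled by VDB: the rag tool builds VDB(ds) from a dataset and takes the top match with vdb(prompt, n_topk)[0][0]. How VDB scores documents is not described here, so the sketch below only illustrates the general idea of top-k retrieval, using bag-of-words cosine similarity. Real systems usually use learned embeddings, and TinyVDB and its (document, score) return format are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def _vectorize(text: str) -> Counter:
    """Lowercased word counts as a sparse vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class TinyVDB:
    """Hypothetical in-memory store returning the top-k most similar documents."""

    def __init__(self, docs: List[str]):
        self.docs = docs
        self.vectors = [_vectorize(d) for d in docs]

    def __call__(self, query: str, n_topk: int = 1) -> List[Tuple[str, float]]:
        q = _vectorize(query)
        scored = [(doc, _cosine(q, vec)) for doc, vec in zip(self.docs, self.vectors)]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:n_topk]

vdb = TinyVDB([
    "import matplotlib.pyplot as plt  # plot a line chart of prices",
    "def sortino(returns): ...  # downside risk ratio",
    "mlx.core arrays live in unified memory",
])
print(vdb("Plot the Sortino ratio", n_topk=1)[0][0])  # def sortino(returns): ...  # downside risk ratio
```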

from phi_3_vision_mlx import VDB

import datasets

# Simulate user input

user_input = 'Comparison of Sortino Ratio for Bitcoin and Ethereum.'

# Create a custom RAG tool

def rag(prompt, repo_id="JosefAlbers/sharegpt_python_mlx", n_topk=1):

ds = datasets.load_dataset(repo_id, split='train')

vdb = VDB(ds)

context = vdb(prompt, n_topk)[0][0]

return f'{context}\n<|end|>\n<|user|>\nPlot: {prompt}'

# Define the toolchain

toolchain_plot = """

prompt = rag(prompt)

responses = generate(prompt, images)

files = execute(responses, step)

"""

# Create an Agent instance with the RAG toolchain

agent = Agent(toolchain_plot, False)

# Run the agent with the user input

_, images = agent(user_input)

Multi-Agent Interaction

# Continued from the Retrieval Augmented Coding Agent example above (reuses user_input and images)

agent_writer = Agent(early_stop=100)

agent_writer(f'Write a stock analysis report on: {user_input}', images)

External LLM Integration

# Create an Agent that uses Mistral-7B-Instruct-v0.3 (via mistral_api) instead of Phi-3

agent = Agent(toolchain="responses, history = mistral_api(prompt, history)")

# Generate a neurology ICU admission note

agent('Write a neurology ICU admission note.')

# Follow-up questions (multi-turn conversation)

agent('Give me the inpatient BP goal for this patient.')

agent('DVT ppx for this patient?')

agent("Patient's prognosis?")

# End

agent.end()

Benchmarks

from phi_3_vision_mlx import benchmark

benchmark()

| Task | Vanilla Model | Quantized Model | Quantized Cache | LoRA Adapter |
|---|---|---|---|---|
| Text Generation | 24.87 tps | 58.61 tps | 18.47 tps | 24.74 tps |
| Image Captioning | 19.08 tps | 37.43 tps | 3.58 tps | 18.88 tps |
| Batched Generation | 235.79 tps | 147.94 tps | 122.02 tps | 233.09 tps |

(Throughput in tokens per second, measured on an M1 Max with 64GB RAM)
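The table reports throughput in tokens per second (tps). How benchmark() counts tokens and time is not spelled out here; a minimal sketch of the usual definition, generated tokens divided by wall-clock seconds, with a hypothetical fake_generate standing in for a real model call:

```python
import time
from typing import Callable

def tokens_per_second(run: Callable[[], int]) -> float:
    """Time a generation callable that returns how many tokens it produced."""
    start = time.perf_counter()
    num_tokens = run()
    elapsed = time.perf_counter() - start
    return num_tokens / elapsed if elapsed > 0 else float("inf")

# Hypothetical stand-in for a generation call: "produces" 50 tokens in about 0.1 s.
def fake_generate() -> int:
    time.sleep(0.1)
    return 50

print(f"{tokens_per_second(fake_generate):.1f} tps")
```

For batched generation, tokens would be summed over every sequence in the batch, which is presumably why the batched row reports much higher figures than single-prompt generation.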

Documentation

API references and additional information are available at:

https://josefalbers.github.io/Phi-3-Vision-MLX/

Also check out our tutorial series available at:

ht...

Phi-3-MLX: Language and Vision Models for Apple Silicon

Phi-3-MLX is a versatile AI framework that leverages both the Phi-3-Vision multimodal model and the Phi-3-Mini-128K language model, optimized for Apple Silicon using the MLX framework. This project provides an easy-to-use interface for a wide range of AI tasks, from advanced text generation to visual question answering and code execution.

Features

- Integration with Phi-3-Vision (multimodal) model

- Support for the Phi-3-Mini-128K (language-only) model

- Optimized performance on Apple Silicon using MLX

- Batched generation for processing multiple prompts

- Flexible agent system for various AI tasks

- Custom toolchains for specialized workflows

- Model quantization for improved efficiency

- LoRA fine-tuning capabilities

- API integration for extended functionality (e.g., image generation, text-to-speech)

Quick Start

Install and launch Phi-3-MLX from the command line:

pip install phi-3-vision-mlx

phi3v

To use the library in a Python script instead:

from phi_3_vision_mlx import generate

1. Core Functionalities

Visual Question Answering

generate('What is shown in this image?', 'https://collectionapi.metmuseum.org/api/collection/v1/iiif/344291/725918/main-image')

Batch Text Generation
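Batched generation needs every prompt in the batch to have the same length. A common approach for decoder-only models is left padding plus an attention mask, so all sequences end at the same position and new tokens are appended in lockstep. Whether phi_3_vision_mlx pads exactly this way is an assumption; the token IDs and pad_id below are hypothetical.

```python
from typing import List, Tuple

def left_pad(batch: List[List[int]], pad_id: int = 0) -> Tuple[List[List[int]], List[List[int]]]:
    """Left-pad token sequences to a common length and build attention masks.

    Mask entries are 1 for real tokens and 0 for padding.
    """
    max_len = max(len(seq) for seq in batch)
    padded, masks = [], []
    for seq in batch:
        n_pad = max_len - len(seq)
        padded.append([pad_id] * n_pad + seq)
        masks.append([0] * n_pad + [1] * len(seq))
    return padded, masks

# Hypothetical token IDs for three prompts of different lengths.
tokens, mask = left_pad([[5, 6, 7], [8], [9, 10]])
print(tokens)  # [[5, 6, 7], [0, 0, 8], [0, 9, 10]]
print(mask)    # [[1, 1, 1], [0, 0, 1], [0, 1, 1]]
```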

# A list of prompts for batch generation

prompts = [

"Explain the key concepts of quantum computing and provide a Rust code example demonstrating quantum superposition.",

"Write a poem about the first snowfall of the year.",

"Summarize the major events of the French Revolution.",

"Describe a bustling alien marketplace on a distant planet with unique goods and creatures.",

"Implement a basic encryption algorithm in Python.",

]

# Generate responses using Phi-3-Vision (multimodal model)

generate(prompts, max_tokens=100)

# Generate responses using Phi-3-Mini-128K (language-only model)

generate(prompts, max_tokens=100, blind_model=True)

Constrained (Beam Search) Decoding

The constrain function allows for structured generation, which can be useful for tasks like code generation, function calling, chain-of-thought prompting, or multiple-choice question answering.
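Constraints are written as a list of (number, string) pairs. Judging from the examples, the number looks like a token budget and the string like the text to steer toward; that reading is an assumption. Because the list form matters, a stray comma after the closing bracket silently turns it into a one-element tuple. A small hypothetical check (not part of phi_3_vision_mlx) catches this before calling constrain:

```python
def check_constraints(constraints):
    """Raise TypeError unless constraints is a list of (int, str) pairs.

    Hypothetical helper; phi_3_vision_mlx may validate differently or not at all.
    """
    if not isinstance(constraints, list):
        raise TypeError(f"expected a list, got {type(constraints).__name__}")
    for item in constraints:
        if not (isinstance(item, tuple) and len(item) == 2
                and isinstance(item[0], int) and isinstance(item[1], str)):
            raise TypeError(f"expected an (int, str) pair, got {item!r}")
    return constraints

good = [(0, "\nThe"), (100, " The correct answer is"), (1, "X.")]
check_constraints(good)  # passes

bad = [(100, " The correct answer is"), (1, "X.")],  # trailing comma: this is a tuple
try:
    check_constraints(bad)
except TypeError as err:
    print(err)  # expected a list, got tuple
```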

from phi_3_vision_mlx import constrain

# Define the prompt

prompt = "Write a Python function to calculate the Fibonacci sequence up to a given number n."

# Define constraints

constraints = [

(100, "\n```python\n"), # Start of code block

(100, " return "), # Ensure a return statement

(200, "\n```"), # End of code block

]

# Apply constrained decoding using the 'constrain' function from phi_3_vision_mlx.

constrain(prompt, constraints)

The constrain function can also guide the model to provide reasoning before concluding with an answer. This approach can be especially helpful for multiple-choice questions, such as those in the Massive Multitask Language Understanding (MMLU) benchmark, where the model's thought process is as crucial as its final selection.
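The reasoning-then-answer pattern can be pictured with a toy model of budgeted constraints: the model writes freely for up to N tokens, and if the constraint text has not appeared by then, it is spliced in before moving on. This is an assumed reading of the (budget, text) pairs, not the library's algorithm. The "model" here is a fixed, hypothetical token stream, so unlike a real model it does not condition on the forced text.

```python
from typing import Iterator, List, Tuple

def toy_constrained_generate(tokens: Iterator[str], constraints: List[Tuple[int, str]]) -> str:
    """For each (budget, text) pair, let the toy model emit up to `budget` tokens;
    if `text` has not appeared by then, append it before moving on."""
    output = ""
    for budget, forced in constraints:
        segment = ""
        for _ in range(budget):
            token = next(tokens, None)
            if token is None:        # toy model has nothing more to say
                break
            segment += token
            if forced in segment:    # constraint satisfied on its own
                break
        if forced not in segment:
            segment += forced        # budget spent: force the constraint text
        output += segment
    return output.strip()

# Hypothetical token stream standing in for model output.
stream = iter([" Normal", " platelets", " and", " long", " PTT", " suggest", " VWD.", " E", "."])
print(toy_constrained_generate(stream, [(7, " The correct answer is"), (2, ".")]))
# Normal platelets and long PTT suggest VWD. The correct answer is E.
```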

prompts = [

"A 20-year-old woman presents with menorrhagia for the past several years. She says that her menses “have always been heavy”, and she has experienced easy bruising for as long as she can remember. Family history is significant for her mother, who had similar problems with bruising easily. The patient's vital signs include: heart rate 98/min, respiratory rate 14/min, temperature 36.1°C (96.9°F), and blood pressure 110/87 mm Hg. Physical examination is unremarkable. Laboratory tests show the following: platelet count 200,000/mm3, PT 12 seconds, and PTT 43 seconds. Which of the following is the most likely cause of this patient’s symptoms? A: Factor V Leiden B: Hemophilia A C: Lupus anticoagulant D: Protein C deficiency E: Von Willebrand disease",

"A 25-year-old primigravida presents to her physician for a routine prenatal visit. She is at 34 weeks gestation, as confirmed by an ultrasound examination. She has no complaints, but notes that the new shoes she bought 2 weeks ago do not fit anymore. The course of her pregnancy has been uneventful and she has been compliant with the recommended prenatal care. Her medical history is unremarkable. She has a 15-pound weight gain since the last visit 3 weeks ago. Her vital signs are as follows: blood pressure, 148/90 mm Hg; heart rate, 88/min; respiratory rate, 16/min; and temperature, 36.6℃ (97.9℉). The blood pressure on repeat assessment 4 hours later is 151/90 mm Hg. The fetal heart rate is 151/min. The physical examination is significant for 2+ pitting edema of the lower extremity. Which of the following tests o should confirm the probable condition of this patient? A: Bilirubin assessment B: Coagulation studies C: Hematocrit assessment D: Leukocyte count with differential E: 24-hour urine protein"

]

# Apply vanilla constrained decoding

constrain(prompts, constraints=[(30, ' The correct answer is'), (10, 'X.')], blind_model=True, quantize_model=True, use_beam=False)

# Apply constrained beam decoding (ACB)

constrain(prompts, constraints=[(30, ' The correct answer is'), (10, 'X.')], blind_model=True, quantize_model=True, use_beam=True)

The constraints encourage a structured response that includes the thought process, making the output more informative and transparent:

< Generated text for prompt #0 >

The most likely cause of this patient's menorrhagia and easy bruising is E: Von Willebrand disease. The correct answer is Von Willebrand disease.

< Generated text for prompt #1 >

The patient's hypertension, edema, and weight gain are concerning for preeclampsia. The correct answer is E: 24-hour urine protein.
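As these samples show, the text after "The correct answer is" may be an option letter ("E: 24-hour urine protein") or only the option text ("Von Willebrand disease"). A hypothetical post-processing helper (not part of phi_3_vision_mlx) can map either form back to a letter, assuming options are written inline as "A: ... B: ..." as in the prompts above:

```python
import re
from typing import Dict, Optional

def parse_options(prompt: str) -> Dict[str, str]:
    """Split inline options such as 'A: Earth B: Mars' into {'A': 'Earth', 'B': 'Mars'}."""
    parts = re.split(r"\s([A-Z]):\s", " " + prompt)
    # parts looks like [question, 'A', text_a, 'B', text_b, ...]
    return {parts[i]: parts[i + 1].strip() for i in range(1, len(parts) - 1, 2)}

def extract_answer(generated: str, prompt: str) -> Optional[str]:
    """Map the text after 'The correct answer is' to an option letter, if possible."""
    match = re.search(r"The correct answer is\s*(.*)", generated)
    if not match:
        return None
    answer = match.group(1).strip()
    letter = re.match(r"([A-Z])(?::|\.|$)", answer)  # 'E: ...', 'E.' or 'E'
    if letter:
        return letter.group(1)
    for key, text in parse_options(prompt).items():  # fall back to the option text
        if answer.rstrip(".").strip().lower() == text.lower():
            return key
    return None

prompt = ("Which is the most likely cause? A: Factor V Leiden B: Hemophilia A "
          "C: Lupus anticoagulant D: Protein C deficiency E: Von Willebrand disease")
print(extract_answer("... The correct answer is Von Willebrand disease.", prompt))    # E
print(extract_answer("... The correct answer is E: Von Willebrand disease.", prompt))  # E
```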

Multiple Choice Selection

The choose function provides a straightforward way to select the best option from a set of choices for a given prompt. This is particularly useful for multiple-choice questions or decision-making scenarios.
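One common way to implement this kind of selection, and an assumption here about what choose does internally, is to score only the allowed option letters as the next token and return the highest-scoring one. A sketch with hypothetical log-probabilities standing in for real model output:

```python
import math
from typing import Dict

def choose_from_scores(next_token_logprobs: Dict[str, float], choices: str = "ABCDE") -> str:
    """Return the allowed choice with the highest score; all other tokens are ignored."""
    allowed = {c: next_token_logprobs.get(c, -math.inf) for c in choices}
    return max(allowed, key=allowed.get)

# Hypothetical next-token log-probabilities after the capital-of-France prompt.
scores = {"C": -0.2, "Paris": -0.1, "A": -2.5, "B": -3.0}
print(choose_from_scores(scores))                                 # C
print(choose_from_scores({"R": -0.3, "G": -1.2}, choices="RYG"))  # R
```

Restricting the argmax to the listed letters is what keeps a higher-scoring free-form token such as "Paris" from being returned.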

from phi_3_vision_mlx import choose

prompt = "What is the capital of France? A: London B: Berlin C: Paris D: Madrid E: Rome"

result = choose(prompt)

print(result) # Output: 'C'

# Using with custom choices

custom_prompt = "Which color is associated with stopping at traffic lights? R: Red Y: Yellow G: Green"

custom_result = choose(custom_prompt, choices='RYG')

print(custom_result) # Output: 'R'

# Batch processing

prompts = [

"What is the largest planet in our solar system? A: Earth B: Mars C: Jupiter D: Saturn",

"Which element has the chemical symbol 'O'? A: Osmium B: Oxygen C: Gold D: Silver"

]

batch_results = choose(prompts)

print(batch_results) # Output: ['C', 'B']

Model and Cache Quantization
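Model quantization stores weights in fewer bits; cache quantization does the same for the key-value cache built up during generation. A widely used scheme, shown here as an illustration rather than a description of this library's internals, is group-wise affine quantization: each group of values gets its own scale and offset, and values are rounded to one of 2^bits levels. A pure-Python sketch with 4 bits and a group size of 4:

```python
from typing import List, Tuple

def quantize_group(values: List[float], bits: int = 4) -> Tuple[List[int], float, float]:
    """Affine-quantize one group to integers in [0, 2**bits - 1]."""
    lo, hi = min(values), max(values)
    levels = 2 ** bits - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    q = [round((v - lo) / scale) for v in values]
    return q, scale, lo

def dequantize_group(q: List[int], scale: float, offset: float) -> List[float]:
    return [offset + scale * x for x in q]

def roundtrip(weights: List[float], group_size: int = 4, bits: int = 4) -> List[float]:
    """Quantize and dequantize weights group by group."""
    out: List[float] = []
    for i in range(0, len(weights), group_size):
        q, scale, offset = quantize_group(weights[i:i + group_size], bits)
        out.extend(dequantize_group(q, scale, offset))
    return out

weights = [0.12, -0.40, 0.33, 0.05, 1.50, 1.20, -0.90, 0.00]
approx = roundtrip(weights)
max_err = max(abs(a - b) for a, b in zip(weights, approx))
print(f"max abs error: {max_err:.4f}")  # bounded by half the largest group's scale
```

With 4-bit codes the storage drops roughly fourfold versus 16-bit values, somewhat less once the per-group scale and offset are counted.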

# Model quantization

generate("Describe the water cycle.", quantize_model=True)

# Cache quantization

generate("Explain quantum computing.", quantize_cache=True)

(Q)LoRA Fine-tuning
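LoRA (low-rank adaptation) keeps the pretrained weight W frozen and trains two small matrices A and B whose product is added to it, commonly scaled by alpha/r; QLoRA applies the same idea on top of a quantized base model. In train_lora, lora_rank sets r. The NumPy sketch below uses a hypothetical 3072×3072 projection and alpha = 32 to show the forward pass and how few parameters the adapter adds; it illustrates the technique and is not this library's code.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out, rank, alpha = 3072, 3072, 16, 32.0  # hypothetical layer size and scaling

W = rng.standard_normal((d_in, d_out)).astype(np.float32) * 0.02  # frozen weight
A = rng.standard_normal((d_in, rank)).astype(np.float32) * 0.01   # trainable, small random init
B = np.zeros((rank, d_out), dtype=np.float32)                     # trainable, zero init

def lora_forward(x: np.ndarray) -> np.ndarray:
    """Frozen projection plus scaled low-rank update: x @ W + (alpha / rank) * x @ A @ B."""
    return x @ W + (alpha / rank) * (x @ A) @ B

x = rng.standard_normal((2, d_in)).astype(np.float32)
# With B initialised to zero, the adapted layer starts out identical to the frozen one.
assert np.allclose(lora_forward(x), x @ W)

full = d_in * d_out
lora = rank * (d_in + d_out)
print(f"trainable params per layer: {lora:,} vs {full:,} ({lora / full:.2%})")
# trainable params per layer: 98,304 vs 9,437,184 (1.04%)
```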

Training a LoRA Adapter

from phi_3_vision_mlx import train_lora

train_lora(

lora_layers=5, # Number of layers to apply LoRA

lora_rank=16, # Rank of the LoRA adaptation

epochs=10, # Number of training epochs

lr=1e-4, # Learning rate

warmup=0.5, # Fraction of steps for learning rate warmup

dataset_path="JosefAlbers/akemiH_MedQA_Reason"

)

Generating Text with LoRA

generate("Describe the potential applications of CRISPR gene editing in medicine.",

blind_model=True,

quantize_model=True,

use_adapter=True)

Comparing LoRA Adapters
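test_lora reports its result as a fraction of correctly answered questions, e.g. 0.6 for 6 out of 10. A minimal sketch of that scoring, assuming each item is graded by exact match of the chosen option letter; the predictions and gold labels are made up for illustration:

```python
from typing import Sequence

def accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of predictions that exactly match the gold answers."""
    if not gold or len(predictions) != len(gold):
        raise ValueError("need equal-length, non-empty predictions and gold answers")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)

gold = list("EEBCADCABE")
base = list("EACCBDCDBE")     # hypothetical answers without an adapter
adapted = list("EEBCADCDBA")  # hypothetical answers with an adapter
print(accuracy(base, gold))     # 0.6
print(accuracy(adapted, gold))  # 0.8
```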

from phi_3_vision_mlx import test_lora

# Test model without LoRA adapter

test_lora(adapter_path=None)

# Output score: 0.6 (6/10)

# Test model with the trained LoRA adapter (using default path)

test_lora(adapter_path=True)

# Output score: 0.8 (8/10)

# Test model with a specific LoRA adapter path

test_lora(adapter_path="/path/to/your/lora/adapter")

2. Agent Interactions
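A multi-turn agent keeps the conversation history and replays it with each new message; end() then discards it. The sketch below shows that idea with a hypothetical MiniAgent class and Phi-3-style chat markers (<|user|>, <|assistant|>, <|end|>). It is not the Agent implementation, the exact template the library uses is an assumption, and the model call is replaced by a stub.

```python
from typing import Callable, List, Tuple

class MiniAgent:
    """Toy multi-turn agent that replays history in a Phi-3-style chat template."""

    def __init__(self, model: Callable[[str], str]):
        self.model = model
        self.history: List[Tuple[str, str]] = []  # (user, assistant) turns

    def build_prompt(self, message: str) -> str:
        parts = [f"<|user|>\n{u}<|end|>\n<|assistant|>\n{a}<|end|>\n" for u, a in self.history]
        parts.append(f"<|user|>\n{message}<|end|>\n<|assistant|>\n")
        return "".join(parts)

    def __call__(self, message: str) -> str:
        reply = self.model(self.build_prompt(message))
        self.history.append((message, reply))
        return reply

    def end(self) -> None:
        """Forget the conversation so the next call starts fresh."""
        self.history.clear()

# Stub model: reports how many user turns it can see in the prompt.
agent = MiniAgent(lambda prompt: f"seen {prompt.count('<|user|>')} turn(s)")
print(agent("Describe the architectural style."))  # seen 1 turn(s)
print(agent("Which historical period?"))           # seen 2 turn(s)
agent.end()
print(agent("New topic."))                         # seen 1 turn(s)
```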

Multi-turn Conversation

from phi_3_vision_mlx import Agent

# Create an instance of the Agent

agent = Agent()

# First interaction: Analyze an image

agent('Analyze this image and describe the architectural style:', 'https://images.metmuseum.org/CRDImages/rl/original/DP-19531-075.jpg')

# Second interaction: Follow-up question

agent('What historical period does this architecture likely belong to?')

# End the conversation: This clears the agent's memory and prepares it for a new conversation

agent.end()

Generative Feedback Loop
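For reference when checking the agent's plots: a Lissajous curve traces x = sin(a·t + δ), y = sin(b·t), and a "3:4 frequency" corresponds to a = 3, b = 4. A sketch that only computes the points, without a plotting library; the phase δ = π/2 and the sample count are arbitrary choices, and the agent's generated code may look quite different:

```python
import math
from typing import List, Tuple

def lissajous(a: int, b: int, delta: float = math.pi / 2, n: int = 1000) -> List[Tuple[float, float]]:
    """Sample n points of x = sin(a*t + delta), y = sin(b*t) over one full period."""
    points = []
    for i in range(n):
        t = 2 * math.pi * i / n
        points.append((math.sin(a * t + delta), math.sin(b * t)))
    return points

pts = lissajous(3, 4)
print(len(pts), pts[0])  # 1000 (1.0, 0.0)
```

To draw it, pass the x and y coordinates to a plotting call such as matplotlib's plt.plot(xs, ys).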

# Ask the agent to generate and execute code to create a plot

agent('Plot a Lissajous Curve.')

# Ask the agent to modify the generated code and create a new plot

agent('Modify the code to plot 3:4 frequency')

agent.end()

External API Tool Use

# Request the agent to generate an image

agent('Draw "A perfectly red apple, 32k HDR, studio lighting"')

agent.end()

# Request the agent to convert text to speech

agent('Speak "People say nothing is impossible, but I do nothing every day."')

agent.end()

3. Custom Toolchains
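A toolchain is written as a string of Python statements over shared names such as prompt, images and responses. One plausible way to run such a string, assumed here for illustration and not taken from the library source, is to execute it in a namespace that exposes the tools and the current variables. The add_text and generate functions below are hypothetical stubs, not the real tools.

```python
from typing import Any, Dict

def run_toolchain(toolchain: str, tools: Dict[str, Any], **state: Any) -> Dict[str, Any]:
    """Execute toolchain statements with the given tools and return the updated state."""
    namespace: Dict[str, Any] = {**tools, **state}
    exec(toolchain, namespace)
    # Keep only the state variables (drop the tools and Python's __builtins__).
    return {k: v for k, v in namespace.items() if k not in tools and k != "__builtins__"}

# Hypothetical stand-ins for the real tools.
def add_text(prompt: str) -> str:
    return prompt + "\n[retrieved context]"

def generate(prompt: str, images: Any) -> list:
    return [f"answer to: {prompt!r}"]

toolchain = """
prompt = add_text(prompt)
responses = generate(prompt, images)
"""

state = run_toolchain(toolchain, {"add_text": add_text, "generate": generate},
                      prompt="How to inspect API endpoints?", images=None)
print(state["responses"])
```

Because exec runs arbitrary code, a toolchain string should only come from a trusted source.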

Example 1. In-Context Learning Agent

from phi_3_vision_mlx import add_text

# Define the toolchain as a string

toolchain = """

prompt = add_text(prompt)

responses = generate(prompt, images)

"""

# Create an Agent instance with the custom toolchain

agent = Agent(toolchain, early_stop=100)

# Run the agent

agent('How to inspect API endpoints? @https://raw.githubusercontent.com/gradio...

Phi-3-MLX: Language and Vision Models for Apple Silicon

Phi-3-MLX is a versatile AI framework that leverages both the Phi-3-Vision multimodal model and the recently updated (July 2, 2024) Phi-3-Mini-128K language model, optimized for Apple Silicon using the MLX framework. This project provides an easy-to-use interface for a wide range of AI tasks, from advanced text generation to visual question answering and code execution.

Features

- Support for the newly updated Phi-3-Mini-128K (language-only) model

- Integration with Phi-3-Vision (multimodal) model

- Optimized performance on Apple Silicon using MLX

- Batched generation for processing multiple prompts

- Flexible agent system for various AI tasks

- Custom toolchains for specialized workflows

- Model quantization for improved efficiency

- LoRA fine-tuning capabilities

- API integration for extended functionality (e.g., image generation, text-to-speech)

Quick Start

Install and launch Phi-3-MLX from the command line:

pip install phi-3-vision-mlx

phi3v

To use the library in a Python script instead:

from phi_3_vision_mlx import generate

1. Core Functionalities

Visual Question Answering

generate('What is shown in this image?', 'https://collectionapi.metmuseum.org/api/collection/v1/iiif/344291/725918/main-image')

Batch Text Generation
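When building a prompts list by hand, note that Python joins adjacent string literals, so a missing comma between two prompts silently merges them into one and the batch shrinks by one. A quick length check, using prompts abridged from this section, catches it:

```python
# Missing comma after the first string: Python concatenates the two literals.
prompts_buggy = [
    "Describe a bustling alien marketplace."
    "Implement a basic encryption algorithm in Python.",
]
prompts_fixed = [
    "Describe a bustling alien marketplace.",
    "Implement a basic encryption algorithm in Python.",
]
print(len(prompts_buggy), len(prompts_fixed))  # 1 2
assert len(prompts_fixed) == 2, "expected one entry per prompt"
```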

# A list of prompts for batch generation

prompts = [

"Explain the key concepts of quantum computing and provide a Rust code example demonstrating quantum superposition.",

"Write a poem about the first snowfall of the year.",

"Summarize the major events of the French Revolution.",

"Describe a bustling alien marketplace on a distant planet with unique goods and creatures.",

"Implement a basic encryption algorithm in Python.",

]

# Generate responses using Phi-3-Vision (multimodal model)

generate(prompts, max_tokens=100)

# Generate responses using Phi-3-Mini-128K (language-only model)

generate(prompts, max_tokens=100, blind_model=True)

Constrained (Beam Search) Decoding

The constrain function allows for structured generation, which can be useful for tasks like code generation, function calling, chain-of-thought prompting, or multiple-choice question answering.

from phi_3_vision_mlx import constrain

# Define the prompt

prompt = "Write a Python function to calculate the Fibonacci sequence up to a given number n."

# Define constraints

constraints = [

(100, "\n```python\n"), # Start of code block

(100, " return "), # Ensure a return statement

(200, "\n```"), # End of code block

]

# Apply constrained decoding using the 'constrain' function from phi_3_vision_mlx.

constrain(prompt, constraints)

The constrain function can also guide the model to provide reasoning before concluding with an answer. This approach can be especially helpful for multiple-choice questions, such as those in the Massive Multitask Language Understanding (MMLU) benchmark, where the model's thought process is as crucial as its final selection.

prompts = [

"A 20-year-old woman presents with menorrhagia for the past several years. She says that her menses “have always been heavy”, and she has experienced easy bruising for as long as she can remember. Family history is significant for her mother, who had similar problems with bruising easily. The patient's vital signs include: heart rate 98/min, respiratory rate 14/min, temperature 36.1°C (96.9°F), and blood pressure 110/87 mm Hg. Physical examination is unremarkable. Laboratory tests show the following: platelet count 200,000/mm3, PT 12 seconds, and PTT 43 seconds. Which of the following is the most likely cause of this patient’s symptoms? A: Factor V Leiden B: Hemophilia A C: Lupus anticoagulant D: Protein C deficiency E: Von Willebrand disease",

"A 25-year-old primigravida presents to her physician for a routine prenatal visit. She is at 34 weeks gestation, as confirmed by an ultrasound examination. She has no complaints, but notes that the new shoes she bought 2 weeks ago do not fit anymore. The course of her pregnancy has been uneventful and she has been compliant with the recommended prenatal care. Her medical history is unremarkable. She has a 15-pound weight gain since the last visit 3 weeks ago. Her vital signs are as follows: blood pressure, 148/90 mm Hg; heart rate, 88/min; respiratory rate, 16/min; and temperature, 36.6℃ (97.9℉). The blood pressure on repeat assessment 4 hours later is 151/90 mm Hg. The fetal heart rate is 151/min. The physical examination is significant for 2+ pitting edema of the lower extremity. Which of the following tests o should confirm the probable condition of this patient? A: Bilirubin assessment B: Coagulation studies C: Hematocrit assessment D: Leukocyte count with differential E: 24-hour urine protein"]

# Apply vanilla constrained decoding

constrain(prompts, constraints=[(30, ' The correct answer is'), (10, 'X.')], blind_model=True, quantize_model=True, use_beam=False)

# Apply constrained beam decoding (ACB)

constrain(prompts, constraints=[(30, ' The correct answer is'), (10, 'X.')], blind_model=True, quantize_model=True, use_beam=True)

The constraints encourage a structured response that includes the thought process, making the output more informative and transparent:

< Generated text for prompt #0 >

The most likely cause of this patient's menorrhagia and easy bruising is E: Von Willebrand disease. The correct answer is Von Willebrand disease.

< Generated text for prompt #1 >

The patient's hypertension, edema, and weight gain are concerning for preeclampsia. The correct answer is E: 24-hour urine protein.
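The two constrain calls above differ only in use_beam. As a generic illustration of what beam search changes (not this library's implementation), the sketch keeps the beam_width highest-scoring partial sequences at each step instead of committing to the single best next token. The next-word table is a hypothetical toy model, chosen so that greedy and beam search disagree.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy model: probability of the next word given the previous word.
NEXT: Dict[str, Dict[str, float]] = {
    "<s>": {"The": 0.6, "A": 0.4},
    "The": {"cat": 0.55, "dog": 0.45},
    "A": {"cat": 0.95, "dog": 0.05},
}

def beam_search(steps: int, beam_width: int) -> Tuple[List[str], float]:
    """Return the best word sequence found and its probability."""
    beams: List[Tuple[List[str], float]] = [(["<s>"], 0.0)]  # (words, log-probability)
    for _ in range(steps):
        candidates = [(words + [w], logp + math.log(p))
                      for words, logp in beams
                      for w, p in NEXT.get(words[-1], {}).items()]
        if not candidates:
            break
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    words, logp = beams[0]
    return words[1:], math.exp(logp)

greedy, p_greedy = beam_search(2, beam_width=1)
beam, p_beam = beam_search(2, beam_width=2)
print(greedy, round(p_greedy, 2))  # ['The', 'cat'] 0.33
print(beam, round(p_beam, 2))      # ['A', 'cat'] 0.38
```

Greedy decoding commits to "The" (0.6) and ends at 0.33, while a beam of two keeps "A" alive long enough to find the more probable "A cat" (0.38).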

Multiple Choice Selection

The choose function provides a straightforward way to select the best option from a set of choices for a given prompt. This is particularly useful for multiple-choice questions or decision-making scenarios.

from phi_3_vision_mlx import choose

prompt = "What is the capital of France? A: London B: Berlin C: Paris D: Madrid E: Rome"

result = choose(prompt)

print(result) # Output: 'C'

# Using with custom choices

custom_prompt = "Which color is associated with stopping at traffic lights? R: Red Y: Yellow G: Green"

custom_result = choose(custom_prompt, choices='RYG')

print(custom_result) # Output: 'R'

# Batch processing

prompts = [

"What is the largest planet in our solar system? A: Earth B: Mars C: Jupiter D: Saturn",

"Which element has the chemical symbol 'O'? A: Osmium B: Oxygen C: Gold D: Silver"

]

batch_results = choose(prompts)

print(batch_results) # Output: ['C', 'B']

Model and Cache Quantization

# Model quantization

generate("Describe the water cycle.", quantize_model=True)

# Cache quantization

generate("Explain quantum computing.", quantize_cache=True)

(Q)LoRA Fine-tuning

Training a LoRA Adapter

from phi_3_vision_mlx import train_lora

train_lora(

lora_layers=5, # Number of layers to apply LoRA

lora_rank=16, # Rank of the LoRA adaptation

epochs=10, # Number of training epochs

lr=1e-4, # Learning rate

warmup=0.5, # Fraction of steps for learning rate warmup

dataset_path="JosefAlbers/akemiH_MedQA_Reason"

)

Generating Text with LoRA

generate("Describe the potential applications of CRISPR gene editing in medicine.",

blind_model=True,

quantize_model=True,

use_adapter=True)

Comparing LoRA Adapters

from phi_3_vision_mlx import test_lora

# Test model without LoRA adapter

test_lora(adapter_path=None)

# Output score: 0.6 (6/10)

# Test model with the trained LoRA adapter (using default path)

test_lora(adapter_path=True)

# Output score: 0.8 (8/10)

# Test model with a specific LoRA adapter path

test_lora(adapter_path="/path/to/your/lora/adapter")

2. Agent Interactions

Multi-turn Conversation

from phi_3_vision_mlx import Agent

# Create an instance of the Agent

agent = Agent()

# First interaction: Analyze an image

agent('Analyze this image and describe the architectural style:', 'https://images.metmuseum.org/CRDImages/rl/original/DP-19531-075.jpg')

# Second interaction: Follow-up question

agent('What historical period does this architecture likely belong to?')

# End the conversation: This clears the agent's memory and prepares it for a new conversation

agent.end()

Generative Feedback Loop

# Ask the agent to generate and execute code to create a plot

agent('Plot a Lissajous Curve.')

# Ask the agent to modify the generated code and create a new plot

agent('Modify the code to plot 3:4 frequency')

agent.end()

External API Tool Use

# Request the agent to generate an image

agent('Draw "A perfectly red apple, 32k HDR, studio lighting"')

agent.end()

# Request the agent to convert text to speech

agent('Speak "People say nothing is impossible, but I do nothing every day."')

agent.end()

3. Custom Toolchains

Example 1. In-Context Learning Agent

from phi_3_vision_mlx import add_text

# Define the toolchain as a string

toolchain = """

prompt = add_text(prompt)

responses = generate(prompt, images)

"""

# Create an Agent instance with the custom toolchain

agent = Agent(toolchain, early_stop=100)

...

Phi-3-MLX: Language and Vision Models for Apple Silicon

Phi-3-MLX is a versatile AI framework that leverages both the Phi-3-Vision multimodal model and the recently updated (July 2, 2024) Phi-3-Mini-128K language model, optimized for Apple Silicon using the MLX framework. This project provides an easy-to-use interface for a wide range of AI tasks, from advanced text generation to visual question answering and code execution.

Features

- Support for the newly updated Phi-3-Mini-128K (language-only) model

- Integration with Phi-3-Vision (multimodal) model

- Optimized performance on Apple Silicon using MLX

- Batched generation for processing multiple prompts

- Flexible agent system for various AI tasks

- Custom toolchains for specialized workflows

- Model quantization for improved efficiency

- LoRA fine-tuning capabilities

- API integration for extended functionality (e.g., image generation, text-to-speech)

Quick Start

Install and launch Phi-3-MLX from the command line:

pip install phi-3-vision-mlx

phi3v

To use the library in a Python script instead:

from phi_3_vision_mlx import generate

1. Core Functionalities

Visual Question Answering

generate('What is shown in this image?', 'https://collectionapi.metmuseum.org/api/collection/v1/iiif/344291/725918/main-image')

Batch Text Generation

# A list of prompts for batch generation

prompts = [

"Explain the key concepts of quantum computing and provide a Rust code example demonstrating quantum superposition.",

"Write a poem about the first snowfall of the year.",

"Summarize the major events of the French Revolution.",

"Describe a bustling alien marketplace on a distant planet with unique goods and creatures.",

"Implement a basic encryption algorithm in Python.",

]

# Generate responses using Phi-3-Vision (multimodal model)

generate(prompts, max_tokens=100)

# Generate responses using Phi-3-Mini-128K (language-only model)

generate(prompts, max_tokens=100, blind_model=True)

Constrained (Beam Search) Decoding

The constrain function allows for structured generation, which can be useful for tasks like code generation, function calling, chain-of-thought prompting, or multiple-choice question answering.

from phi_3_vision_mlx import constrain

# Define the prompt

prompt = "Write a Python function to calculate the Fibonacci sequence up to a given number n."

# Define constraints

constraints = [

(100, "\n```python\n"), # Start of code block

(100, " return "), # Ensure a return statement

(200, "\n```"), # End of code block

]

# Apply constrained decoding using the 'constrain' function from phi_3_vision_mlx.

constrain(prompt, constraints)

The constrain function can also guide the model to provide reasoning before concluding with an answer. This approach can be especially helpful for multiple-choice questions, such as those in the Massive Multitask Language Understanding (MMLU) benchmark, where the model's thought process is as crucial as its final selection.

prompts = [

"A 20-year-old woman presents with menorrhagia for the past several years. She says that her menses “have always been heavy”, and she has experienced easy bruising for as long as she can remember. Family history is significant for her mother, who had similar problems with bruising easily. The patient's vital signs include: heart rate 98/min, respiratory rate 14/min, temperature 36.1°C (96.9°F), and blood pressure 110/87 mm Hg. Physical examination is unremarkable. Laboratory tests show the following: platelet count 200,000/mm3, PT 12 seconds, and PTT 43 seconds. Which of the following is the most likely cause of this patient’s symptoms? A: Factor V Leiden B: Hemophilia A C: Lupus anticoagulant D: Protein C deficiency E: Von Willebrand disease",

"A 25-year-old primigravida presents to her physician for a routine prenatal visit. She is at 34 weeks gestation, as confirmed by an ultrasound examination. She has no complaints, but notes that the new shoes she bought 2 weeks ago do not fit anymore. The course of her pregnancy has been uneventful and she has been compliant with the recommended prenatal care. Her medical history is unremarkable. She has a 15-pound weight gain since the last visit 3 weeks ago. Her vital signs are as follows: blood pressure, 148/90 mm Hg; heart rate, 88/min; respiratory rate, 16/min; and temperature, 36.6℃ (97.9℉). The blood pressure on repeat assessment 4 hours later is 151/90 mm Hg. The fetal heart rate is 151/min. The physical examination is significant for 2+ pitting edema of the lower extremity. Which of the following tests o should confirm the probable condition of this patient? A: Bilirubin assessment B: Coagulation studies C: Hematocrit assessment D: Leukocyte count with differential E: 24-hour urine protein"]

constrain(prompts, constraints=[(30, ' The correct answer is'), (10, 'X.')], blind_model=True, quantize_model=True)

The constraints encourage a structured response that includes the thought process, making the output more informative and transparent:

< Generated text for prompt #0 >

The most likely cause of this patient's menorrhagia and easy bruising is E: Von Willebrand disease. The correct answer is Von Willebrand disease.

< Generated text for prompt #1 >

The patient's hypertension, edema, and weight gain are concerning for preeclampsia. The correct answer is E: 24-hour urine protein.
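Because the forced phrase gives the reply a predictable anchor, the chosen option can be pulled out with a small parser. The sketch below is not part of phi_3_vision_mlx: extract_choice is a hypothetical helper, and its fallback to the last "X:" label is an assumption meant to cover replies like prompt #0, where the text after the forced phrase names the disease instead of the letter.

```python
import re


def extract_choice(text, choices="ABCDE"):
    """Return the option letter a constrained reply settles on, or None.

    Hypothetical helper: first looks for "The correct answer is <letter>",
    then falls back to the last "<letter>:" label mentioned in the reply.
    """
    letters = re.escape(choices)
    forced = re.search(rf"The correct answer is\s+([{letters}])\b", text)
    if forced:
        return forced.group(1)
    # Fallback (assumption): the last "E:"-style label in the reply.
    labels = re.findall(rf"\b([{letters}]):", text)
    return labels[-1] if labels else None


if __name__ == "__main__":
    print(extract_choice("The correct answer is E: 24-hour urine protein."))
```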

Multiple Choice Selection

The choose function provides a straightforward way to select the best option from a set of choices for a given prompt. This is particularly useful for multiple-choice questions or decision-making scenarios.
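One common way to implement this kind of selection is to compare the model's next-token scores for the allowed option letters only and return the highest. The sketch below illustrates that idea with hand-written example scores standing in for real model output; pick_choice is a hypothetical helper, and whether choose works exactly this way is an assumption.

```python
def pick_choice(scores, choices="ABCDE"):
    """Return the allowed choice letter with the highest score.

    `scores` maps candidate next tokens to (log-)probabilities. Tokens that
    are not among the allowed choice letters are ignored entirely.
    """
    allowed = {c: scores[c] for c in choices if c in scores}
    if not allowed:
        raise ValueError("no allowed choice has a score")
    return max(allowed, key=allowed.get)


if __name__ == "__main__":
    # Example scores only; a real model would supply these.
    print(pick_choice({"C": -0.2, "The": 0.5, "A": -3.0, "B": -2.1}))
```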

from phi_3_vision_mlx import choose

prompt = "What is the capital of France? A: London B: Berlin C: Paris D: Madrid E: Rome"

result = choose(prompt)

print(result) # Output: 'C'

# Using with custom choices

custom_prompt = "Which color is associated with stopping at traffic lights? R: Red Y: Yellow G: Green"

custom_result = choose(custom_prompt, choices='RYG')

print(custom_result) # Output: 'R'

# Batch processing

prompts = [

"What is the largest planet in our solar system? A: Earth B: Mars C: Jupiter D: Saturn",

"Which element has the chemical symbol 'O'? A: Osmium B: Oxygen C: Gold D: Silver"

]

batch_results = choose(prompts)

print(batch_results) # Output: ['C', 'B']

Model and Cache Quantization

# Model quantization

generate("Describe the water cycle.", quantize_model=True)

# Cache quantization

generate("Explain quantum computing.", quantize_cache=True)

(Q)LoRA Fine-tuning

Training a LoRA Adapter

from phi_3_vision_mlx import train_lora

train_lora(

lora_layers=5, # Number of layers to apply LoRA

lora_rank=16, # Rank of the LoRA adaptation

epochs=10, # Number of training epochs

lr=1e-4, # Learning rate

warmup=0.5, # Fraction of steps for learning rate warmup

dataset_path="JosefAlbers/akemiH_MedQA_Reason"

)

Generating Text with LoRA

generate("Describe the potential applications of CRISPR gene editing in medicine.",

blind_model=True,

quantize_model=True,

use_adapter=True)

Comparing LoRA Adapters

from phi_3_vision_mlx import test_lora

# Test model without LoRA adapter

test_lora(adapter_path=None)

# Output score: 0.6 (6/10)

# Test model with the trained LoRA adapter (using default path)

test_lora(adapter_path=True)

# Output score: 0.8 (8/10)

# Test model with a specific LoRA adapter path

test_lora(adapter_path="/path/to/your/lora/adapter")

2. Agent Interactions

Multi-turn Conversation

from phi_3_vision_mlx import Agent

# Create an instance of the Agent

agent = Agent()

# First interaction: Analyze an image

agent('Analyze this image and describe the architectural style:', 'https://images.metmuseum.org/CRDImages/rl/original/DP-19531-075.jpg')

# Second interaction: Follow-up question

agent('What historical period does this architecture likely belong to?')

# End the conversation: This clears the agent's memory and prepares it for a new conversation

agent.end()

Generative Feedback Loop

# Ask the agent to generate and execute code to create a plot

agent('Plot a Lissajous Curve.')

# Ask the agent to modify the generated code and create a new plot

agent('Modify the code to plot 3:4 frequency')

agent.end()

External API Tool Use

# Request the agent to generate an image

agent('Draw "A perfectly red apple, 32k HDR, studio lighting"')

agent.end()

# Request the agent to convert text to speech

agent('Speak "People say nothing is impossible, but I do nothing every day."')

agent.end()

3. Custom Toolchains

Example 1. In-Context Learning Agent

from phi_3_vision_mlx import add_text

# Define the toolchain as a string

toolchain = """

prompt = add_text(prompt)

responses = generate(prompt, images)

"""

# Create an Agent instance with the custom toolchain

agent = Agent(toolchain, early_stop=100)

# Run the agent

agent('How to inspect API endpoints? @https://raw.githubusercontent.com/gradio-app/gradio/main/guides/08_gradio-clients-and-lite/01_getting-started-with-the-python-client.md')

Example 2. Retrieval ...

Phi-3-MLX: Language and Vision Models for Apple Silicon


Phi-3-MLX is a versatile AI framework that leverages both the Phi-3-Vision multimodal model and the recently updated (July 2, 2024) Phi-3-Mini-128K language model, optimized for Apple Silicon using the MLX framework. This project provides an easy-to-use interface for a wide range of AI tasks, from advanced text generation to visual question answering and code execution.

Features

- Support for the newly updated Phi-3-Mini-128K (language-only) model

- Integration with Phi-3-Vision (multimodal) model

- Optimized performance on Apple Silicon using MLX

- Batched generation for processing multiple prompts

- Flexible agent system for various AI tasks

- Custom toolchains for specialized workflows

- Model quantization for improved efficiency

- LoRA fine-tuning capabilities

- API integration for extended functionality (e.g., image generation, text-to-speech)

Quick Start

Install and launch Phi-3-MLX from command line:

pip install phi-3-vision-mlx

phi3v

To instead use the library in a Python script:

from phi_3_vision_mlx import generate

1. Core Functionalities

Visual Question Answering

generate('What is shown in this image?', 'https://collectionapi.metmuseum.org/api/collection/v1/iiif/344291/725918/main-image')

Batch Text Generation

# A list of prompts for batch generation

prompts = [

"Explain the key concepts of quantum computing and provide a Rust code example demonstrating quantum superposition.",

"Write a poem about the first snowfall of the year.",

"Summarize the major events of the French Revolution.",

"Describe a bustling alien marketplace on a distant planet with unique goods and creatures.",

"Implement a basic encryption algorithm in Python.",

]

# Generate responses using Phi-3-Vision (multimodal model)

generate(prompts, max_tokens=100)

# Generate responses using Phi-3-Mini-128K (language-only model)

generate(prompts, max_tokens=100, blind_model=True)

Model and Cache Quantization

# Model quantization

generate("Describe the water cycle.", quantize_model=True)

# Cache quantization

generate("Explain quantum computing.", quantize_cache=True)

Structured Generation Using Constrained Decoding (WIP)

The constrain function allows for structured generation, which can be useful for tasks like code generation, function calling, chain-of-thought prompting, or multiple-choice question answering.

from phi_3_vision_mlx import constrain

# Define the prompt

prompt = "Write a Python function to calculate the Fibonacci sequence up to a given number n."

# Define constraints

constraints = [

(100, "\n```python\n"), # Start of code block

(100, " return "), # Ensure a return statement

(200, "\n```")]  # End of code block

# Apply constrained decoding using the 'constrain' function from phi_3_vision_mlx.

constrain(prompt, constraints)

The constrain function can also guide the model to provide reasoning before concluding with an answer. This approach can be especially helpful for multiple-choice questions, such as those in the Massive Multitask Language Understanding (MMLU) benchmark, where the model's thought process is as crucial as its final selection.

prompts = [

"A 20-year-old woman presents with menorrhagia for the past several years. She says that her menses “have always been heavy”, and she has experienced easy bruising for as long as she can remember. Family history is significant for her mother, who had similar problems with bruising easily. The patient's vital signs include: heart rate 98/min, respiratory rate 14/min, temperature 36.1°C (96.9°F), and blood pressure 110/87 mm Hg. Physical examination is unremarkable. Laboratory tests show the following: platelet count 200,000/mm3, PT 12 seconds, and PTT 43 seconds. Which of the following is the most likely cause of this patient’s symptoms? A: Factor V Leiden B: Hemophilia A C: Lupus anticoagulant D: Protein C deficiency E: Von Willebrand disease",

"A 25-year-old primigravida presents to her physician for a routine prenatal visit. She is at 34 weeks gestation, as confirmed by an ultrasound examination. She has no complaints, but notes that the new shoes she bought 2 weeks ago do not fit anymore. The course of her pregnancy has been uneventful and she has been compliant with the recommended prenatal care. Her medical history is unremarkable. She has a 15-pound weight gain since the last visit 3 weeks ago. Her vital signs are as follows: blood pressure, 148/90 mm Hg; heart rate, 88/min; respiratory rate, 16/min; and temperature, 36.6℃ (97.9℉). The blood pressure on repeat assessment 4 hours later is 151/90 mm Hg. The fetal heart rate is 151/min. The physical examination is significant for 2+ pitting edema of the lower extremity. Which of the following tests should confirm the probable condition of this patient? A: Bilirubin assessment B: Coagulation studies C: Hematocrit assessment D: Leukocyte count with differential E: 24-hour urine protein"]

constrain(prompts, constraints=[(30, ' The correct answer is'), (10, 'X.')], blind_model=True, quantize_model=True)

The constraints encourage a structured response that includes the thought process, making the output more informative and transparent:

< Generated text for prompt #0 >

The most likely cause of this patient's menorrhagia and easy bruising is E: Von Willebrand disease. The correct answer is Von Willebrand disease.

< Generated text for prompt #1 >

The patient's hypertension, edema, and weight gain are concerning for preeclampsia. The correct answer is E: 24-hour urine protein.
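How each (number, text) pair in constraints is applied is not spelled out in these notes. One plausible reading, treated here purely as an assumption, is: let the model generate freely for at most that many tokens, then append the text verbatim so that later tokens are conditioned on it. The toy below implements that reading, with a scripted stub standing in for the model; constrained_generate and scripted are hypothetical names, and using <|end|> as the stop token is an illustrative choice.

```python
from typing import Callable, List, Tuple


def constrained_generate(
    prompt: str,
    constraints: List[Tuple[int, str]],
    next_token: Callable[[str], str],
    stop: str = "<|end|>",
) -> str:
    """Toy constrained decoding: free generation interleaved with forced text.

    For each (budget, forced) pair, take at most `budget` tokens from
    `next_token` (stopping early at `stop`), then append `forced` verbatim.
    """
    output = ""
    for budget, forced in constraints:
        for _ in range(budget):
            token = next_token(prompt + output)
            if token == stop:
                break
            output += token
        output += forced  # later tokens are conditioned on the forced text
    return output


def scripted(tokens):
    """Stub 'model' that replays a fixed token list, then emits the stop token."""
    it = iter(tokens)
    return lambda context: next(it, "<|end|>")


if __name__ == "__main__":
    model = scripted([" Preeclampsia", " is", " likely.", "<|end|>", " E"])
    print(constrained_generate("Q: ...", [(30, " The correct answer is"), (1, ".")], model))
```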

(Q)LoRA Fine-tuning

Training a (Q)LoRA Adapter

from phi_3_vision_mlx import train_lora

train_lora(

lora_layers=5, # Number of layers to apply LoRA

lora_rank=16, # Rank of the LoRA adaptation

epochs=10, # Number of training epochs

lr=1e-4, # Learning rate

warmup=0.5, # Fraction of steps for learning rate warmup

dataset_path="JosefAlbers/akemiH_MedQA_Reason"

)

Generating Text with (Q)LoRA

generate("Describe the potential applications of CRISPR gene editing in medicine.",

blind_model=True,

quantize_model=True,

use_adapter=True)

Comparing (Q)LoRA Adapters

from phi_3_vision_mlx import test_lora

# Test model without LoRA adapter

test_lora(adapter_path=None)

# Output score: 0.6 (6/10)

# Test model with the trained LoRA adapter (using default path)

test_lora(adapter_path=True)

# Output score: 0.8 (8/10)

# Test model with a specific LoRA adapter path

test_lora(adapter_path="/path/to/your/lora/adapter")

2. Agent Interactions

Multi-turn Conversation

from phi_3_vision_mlx import Agent

# Create an instance of the Agent

agent = Agent()

# First interaction: Analyze an image

agent('Analyze this image and describe the architectural style:', 'https://images.metmuseum.org/CRDImages/rl/original/DP-19531-075.jpg')

# Second interaction: Follow-up question

agent('What historical period does this architecture likely belong to?')

# End the conversation: This clears the agent's memory and prepares it for a new conversation

agent.end()

Generative Feedback Loop

# Ask the agent to generate and execute code to create a plot

agent('Plot a Lissajous Curve.')

# Ask the agent to modify the generated code and create a new plot

agent('Modify the code to plot 3:4 frequency')

agent.end()

External API Tool Use

# Request the agent to generate an image

agent('Draw "A perfectly red apple, 32k HDR, studio lighting"')

agent.end()

# Request the agent to convert text to speech

agent('Speak "People say nothing is impossible, but I do nothing every day."')

agent.end()

3. Custom Toolchains

Example 1. In-Context Learning Agent

from phi_3_vision_mlx import _load_text

# Create a custom tool named 'add_text'

def add_text(prompt):
    prompt, path = prompt.split('@')
    return f'{_load_text(path)}\n<|end|>\n<|user|>{prompt}'

# Define the toolchain as a string

toolchain = """

prompt = add_text(prompt)

responses = generate(prompt, images)

"""

# Create an Agent instance with the custom toolchain

agent = Agent(toolchain, early_stop=100)

# Run the agent

agent('How to inspect API endpoints? @https://raw.githubusercontent.com/gradio-app/gradio/main/guides/08_gradio-clients-and-lite/01_getting-started-with-the-python-client.md')

Example 2. Retrieval Augmented Coding Agent

from phi_3_vision_mlx import VDB

import datasets

# Simulate user input

user_input = 'Comparison of Sortino Ratio for Bitcoin and Ethereum.'

# Create a custom RAG tool

def rag(prompt, repo_id="JosefAlbers/sharegpt_python_mlx", n_topk=1):
    ds = datasets.load_dataset(repo_id, split='train')
    vdb = VDB(ds)
    context = vdb(prompt, n_topk)[0][0]
    return f'{context}\n<|end|>\n<|user|>Plot: {prompt}'

# Define the toolchain

toolchain_plot = """

prompt = rag(prompt)

responses = generate(prompt, images)

files = execute(responses, step)

"""

# Create an Agent instance with the RAG toolchain

agent = Agent(toolchain_plot, False)

...

Phi-3-MLX: Language and Vision Models for Apple Silicon


Phi-3-MLX is a versatile AI framework that leverages both the Phi-3-Vision multimodal model and the recently updated (July 2, 2024) Phi-3-Mini-128K language model, optimized for Apple Silicon using the MLX framework. This project provides an easy-to-use interface for a wide range of AI tasks, from advanced text generation to visual question answering and code execution.

Recent Updates: Phi-3 Mini Improvements

Microsoft has recently released significant updates to the Phi-3 Mini model that improve its capabilities in the following areas:

- Substantially enhanced code understanding in Python, C++, Rust, and TypeScript

- Improved post-training for better-structured output

- Enhanced multi-turn instruction following

- Added support for the <|system|> tag

- Improved reasoning and long-context understanding
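With the <|system|> tag, a system message can precede the user turn. The helper below sketches the commonly documented Phi-3 chat layout, where each turn is written as <|role|>, a newline, the content, and <|end|>, followed by an <|assistant|> tag that cues the reply. format_phi3 is hypothetical, the exact whitespace is an assumption, and in practice the tokenizer's own chat template is the authoritative source.

```python
def format_phi3(messages):
    """Render (role, content) pairs in a Phi-3 style prompt string.

    Roles must be "system", "user" or "assistant". The trailing
    "<|assistant|>" tag asks the model to produce the next reply.
    """
    parts = []
    for role, content in messages:
        if role not in ("system", "user", "assistant"):
            raise ValueError(f"unknown role: {role}")
        parts.append(f"<|{role}|>\n{content}<|end|>\n")
    parts.append("<|assistant|>\n")
    return "".join(parts)


if __name__ == "__main__":
    print(format_phi3([("system", "You are concise."), ("user", "Hi")]))
```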

Features

- Support for the newly updated Phi-3-Mini-128K (language-only) model

- Integration with Phi-3-Vision (multimodal) model

- Optimized performance on Apple Silicon using MLX

- Batched generation for processing multiple prompts

- Flexible agent system for various AI tasks

- Custom toolchains for specialized workflows

- Model quantization for improved efficiency

- LoRA fine-tuning capabilities

- API integration for extended functionality (e.g., image generation, text-to-speech)

Quick Start

Install and launch Phi-3-MLX from command line:

pip install phi-3-vision-mlx

phi3v

To instead use the library in a Python script:

from phi_3_vision_mlx import generate

Usage Examples

1. Core Functionalities

Visual Question Answering

generate('What is shown in this image?', 'https://collectionapi.metmuseum.org/api/collection/v1/iiif/344291/725918/main-image')

Batch Generation

prompts = [

"Explain the key concepts of quantum computing and provide a Rust code example demonstrating quantum superposition.",

"Write a poem about the first snowfall of the year.",

"Summarize the major events of the French Revolution.",

"Describe a bustling alien marketplace on a distant planet with unique goods and creatures.",

"Implement a basic encryption algorithm in Python.",

]

# Phi-3-Vision

generate(prompts, max_tokens=100)

# Phi-3-Mini-128K

generate(prompts, max_tokens=100, blind_model=True)

Model and Cache Quantization

# Model quantization

generate("Explain the implications of quantum entanglement in quantum computing.", quantize_model=True)

# Cache quantization

generate("Describe the potential applications of CRISPR gene editing in medicine.", quantize_cache=True)

LoRA Fine-tuning

from phi_3_vision_mlx import train_lora

train_lora(lora_layers=5, lora_rank=16, epochs=10, lr=1e-4, warmup=.5, mask_ratios=[.0], adapter_path='adapters', dataset_path="JosefAlbers/akemiH_MedQA_Reason")

generate("Write a cosmic horror.", adapter_path='adapters')

2. Agent Interactions

Multi-turn Conversations and Context Handling

from phi_3_vision_mlx import Agent

agent = Agent()

agent('Analyze this image and describe the architectural style:', 'https://images.metmuseum.org/CRDImages/rl/original/DP-19531-075.jpg')

agent('What historical period does this architecture likely belong to?')

agent.end()

Generative Feedback Loop

agent('Plot a Lissajous Curve.')

agent('Modify the code to plot 3:4 frequency')

agent.end()

Extending Capabilities with API Integration

agent('Draw "A perfectly red apple, 32k HDR, studio lighting"')

agent.end()

agent('Speak "People say nothing is impossible, but I do nothing every day."')

agent.end()

3. Toolchain Customization

Example 1. In-Context Learning

from phi_3_vision_mlx import load_text

# Create tool

def add_text(prompt):
    prompt, path = prompt.split('@')
    return f'{load_text(path)}\n<|end|>\n<|user|>{prompt}'

# Chain tools

toolchain = """

prompt = add_text(prompt)

responses = generate(prompt, images)

"""

# Create agent

agent = Agent(toolchain, early_stop=100)

# Run agent

agent('How to inspect API endpoints? @https://raw.githubusercontent.com/gradio-app/gradio/main/guides/08_gradio-clients-and-lite/01_getting-started-with-the-python-client.md')

Example 2. Retrieval Augmented Coding

from phi_3_vision_mlx import VDB

import datasets

# User proxy

user_input = 'Comparison of Sortino Ratio for Bitcoin and Ethereum.'

# Create tool

def rag(prompt, repo_id="JosefAlbers/sharegpt_python_mlx", n_topk=1):
    ds = datasets.load_dataset(repo_id, split='train')
    vdb = VDB(ds)
    context = vdb(prompt, n_topk)[0][0]
    return f'{context}\n<|end|>\n<|user|>Plot: {prompt}'

# Chain tools

toolchain_plot = """

prompt = rag(prompt)

responses = generate(prompt, images)

files = execute(responses, step)

"""

# Create agent

agent = Agent(toolchain_plot, False)

# Run agent

_, images = agent(user_input)

Example 3. Multi-Agent Interaction

# Continued from Example 2

agent_writer = Agent(early_stop=100)

agent_writer(f'Write a stock analysis report on: {user_input}', images)

Benchmarks

from phi_3_vision_mlx import benchmark

benchmark()

| Task | Vanilla Model | Quantized Model | Quantized Cache | LoRA Adapter |
|---|---|---|---|---|
| Text Generation | 8.72 tps | 55.97 tps | 7.04 tps | 8.71 tps |
| Image Captioning | 8.04 tps | 32.48 tps | 1.77 tps | 8.00 tps |
| Batched Generation | 30.74 tps | 106.94 tps | 20.47 tps | 30.72 tps |

(On an M1 Max 64GB; tps = tokens per second)
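Expressed as ratios to the vanilla model, the table shows model quantization running roughly 3.5x to 6.4x faster, cache quantization running slower in every task, and the LoRA adapter costing almost nothing. The short script below only recomputes those ratios from the published figures.

```python
# Tokens per second, copied from the benchmark table (M1 Max, 64GB).
BENCHMARKS = {
    "Text Generation": {"Vanilla": 8.72, "Quantized Model": 55.97, "Quantized Cache": 7.04, "LoRA Adapter": 8.71},
    "Image Captioning": {"Vanilla": 8.04, "Quantized Model": 32.48, "Quantized Cache": 1.77, "LoRA Adapter": 8.00},
    "Batched Generation": {"Vanilla": 30.74, "Quantized Model": 106.94, "Quantized Cache": 20.47, "LoRA Adapter": 30.72},
}


def speedups(row):
    """Throughput of each variant relative to the vanilla model (1.0 = same speed)."""
    base = row["Vanilla"]
    return {name: tps / base for name, tps in row.items() if name != "Vanilla"}


if __name__ == "__main__":
    for task, row in BENCHMARKS.items():
        ratios = ", ".join(f"{name} {r:.2f}x" for name, r in speedups(row).items())
        print(f"{task}: {ratios}")
```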

License

This project is licensed under the MIT License.

Citation

Phi-3-Vision for Apple MLX


Phi-3-Vision for Apple MLX is a powerful and flexible AI agent framework that leverages the Phi-3-Vision model to perform a wide range of tasks, from visual question answering to code generation and execution. This project aims to provide an easy-to-use interface for interacting with the Phi-3-Vision model, while also offering advanced features like custom toolchains and model quantization.

Phi-3-Vision is a state-of-the-art vision-language model that excels in understanding and generating content based on both textual and visual inputs. By integrating this model with Apple's MLX framework, we provide a high-performance solution optimized for Apple silicon.

Quick Start

1. Install Phi-3 Vision MLX:

To install Phi-3-Vision-MLX, run the following command:

pip install phi-3-vision-mlx

2. Launch Phi-3 Vision MLX:

To launch Phi-3-Vision-MLX:

phi3v

Or in a Python script:

from phi_3_vision_mlx import Agent

agent = Agent()

Usage

Visual Question Answering (VQA)

agent('What is shown in this image?', 'https://collectionapi.metmuseum.org/api/collection/v1/iiif/344291/725918/main-image')

agent.end()

Generative Feedback Loop

The agent can be used to generate code, execute it, and then modify it based on feedback:

agent('Plot a Lissajous Curve.')

agent('Modify the code to plot 3:4 frequency')

agent.end()

API Tool Use

You can use the agent to create images or generate speech using API calls:

agent('Draw "A perfectly red apple, 32k HDR, studio lighting"')

agent.end()

agent('Speak "People say nothing is impossible, but I do nothing every day."')

agent.end()

Custom Toolchain

Toolchains allow you to customize the agent's behavior for specific tasks. Here are three examples:

Example 1: In-Context Learning (ICL)

You can create a custom toolchain to add context to the prompt:

from phi_3_vision_mlx import load_text

# Create tool

def add_text(prompt):
    prompt, path = prompt.split('@')
    return f'{load_text(path)}\n<|end|>\n<|user|>{prompt}'

# Chain tools

toolchain = """

prompt = add_text(prompt)

responses = generate(prompt, images)

"""

# Create agent

agent = Agent(toolchain, early_stop=100)

# Run agent

agent('How to inspect API endpoints? @https://raw.githubusercontent.com/gradio-app/gradio/main/guides/08_gradio-clients-and-lite/01_getting-started-with-the-python-client.md')

This toolchain adds context to the prompt from an external source, enhancing the agent's knowledge for specific queries.
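Note that prompt.split('@') raises a ValueError when the question contains no '@' or more than one. A slightly more defensive variant is sketched below: it splits on the last '@' and takes the loader as a parameter so it can be exercised without network access. make_add_text is hypothetical, and wiring it up as make_add_text(load_text) with load_text from phi_3_vision_mlx is an assumption.

```python
def make_add_text(load_text):
    """Build an add_text-style tool around any text loader (hypothetical helper).

    The prompt is expected to look like "question @source". Everything after
    the last '@' is treated as the source, so an '@' inside the question
    itself does not break the split.
    """
    def add_text(prompt):
        question, sep, source = prompt.rpartition("@")
        if not sep:
            return prompt  # no "@source" marker: leave the prompt unchanged
        context = load_text(source.strip())
        return f"{context}\n<|end|>\n<|user|>{question.strip()}"

    return add_text


if __name__ == "__main__":
    # A fake loader stands in for fetching the document.
    tool = make_add_text(lambda src: f"[contents of {src}]")
    print(tool("How to inspect API endpoints? @docs/guide.md"))
```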

Example 2: Retrieval Augmented Generation (RAG)

You can create another custom toolchain that uses retrieval-augmented generation (RAG) for coding:

from phi_3_vision_mlx import VDB

import datasets

# User proxy

user_input = 'Comparison of Sortino Ratio for Bitcoin and Ethereum.'

# Create tool

def rag(prompt, repo_id="JosefAlbers/sharegpt_python_mlx", n_topk=1):
    ds = datasets.load_dataset(repo_id, split='train')
    vdb = VDB(ds)
    context = vdb(prompt, n_topk)[0][0]
    return f'{context}\n<|end|>\n<|user|>Plot: {prompt}'

# Chain tools

toolchain_plot = """

prompt = rag(prompt)

responses = generate(prompt, images)

files = execute(responses, step)

"""

# Create agent

agent = Agent(toolchain_plot, False)

# Run agent

_, images = agent(user_input)

Example 3: Multi-Agent Interaction

You can also have multiple agents interacting to complete a task:

agent_writer = Agent(early_stop=100)

agent_writer(f'Write a stock analysis report on: {user_input}', images)

Batch Generation

For efficient processing of multiple prompts:

from phi_3_vision_mlx import generate

generate([

"Write an executive summary for a communications business plan",

"Write a resume.",

"Write a mystery horror.",

"Write a Neurology ICU Admission Note.",])

Model and Cache Quantization

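Model quantization reduces the model's size and, in the benchmarks below, substantially speeds up generation; cache quantization instead reduces the memory taken by the key-value cache and is slower in the same benchmarks: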

generate("Write a cosmic horror.", quantize_cache=True)

generate("Write a cosmic horror.", quantize_model=True)

LoRA Training and Inference

Fine-tune the model for specific tasks:

from phi_3_vision_mlx import train_lora

train_lora(lora_layers=5, lora_rank=16, epochs=10, lr=1e-4, warmup=.5, mask_ratios=[.0], adapter_path='adapters', dataset_path="JosefAlbers/akemiH_MedQA_Reason")

Use the fine-tuned model:

generate("Write a cosmic horror.", adapter_path='adapters')

Benchmarks

| Task | Vanilla Model | Quantized Model | Quantized Cache | LoRA |
|---|---|---|---|---|
| Text Generation | 8.72 tps | 55.97 tps | 7.04 tps | 8.71 tps |
| Image Captioning | 8.04 tps | 32.48 tps | 1.77 tps | 8.00 tps |
| Batched Generation | 30.74 tps | 106.94 tps | 20.47 tps | 30.72 tps |

License

This project is licensed under the MIT License.

Citation

v0.0.4

Phi-3-Vision VLM Model for Apple MLX: An All-in-One Port

This project brings the phi-3-vision VLM to Apple's MLX framework, offering a comprehensive solution for various text and image processing tasks. With a focus on simplicity and efficiency, it provides a straightforward, minimal integration of the model. It covers generating quantized model weights, applying KV cache quantization during inference, LoRA/QLoRA training, and model benchmarking, all contained in a single file for convenient access and usage.

Key Features

- Batch Generation: Accelerate inference by generating text for multiple prompts concurrently (107 tokens-per-sec batched vs 56 tokens-per-sec unbatched)

- Model Quantization: Reduce model size for faster loading and deployment (2.3GB quantized vs 8.5GB original full-precision).

- Cache Quantization: Optimize inference for processing long contexts with key-value cache quantization.

- Chat Template: Use the model's chat template to streamline interactions with the model.

- LoRA Training: Easily customize the model for specific tasks or datasets using LoRA.

- Benchmarking: Quickly assess model performance on any dataset. (WIP)

- Su-scaled RoPE: Manages sequences of up to 128K tokens.

- VLM Agent: Leverages VLM's visual understanding for interactive code generation and refinement, enabling data visualization and image manipulation through a visual feedback loop. (WIP)

- Long Context RAG: Enables the integration of Retrieval-Augmented Generation to harness large amounts of external knowledge for complex tasks such as code understanding, leveraging the phi-3-vision model's 128K context window. (WIP)
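At its core, the Long Context RAG item means: find the stored passages most similar to the request and prepend them to the prompt. The pure-Python sketch below uses bag-of-words cosine similarity as a stand-in for learned embeddings and reuses the <|end|>/<|user|> prompt layout seen elsewhere in these notes. It does not reflect how the project's own retrieval is implemented, and all names are hypothetical.

```python
import math
import re
from collections import Counter


def _vectorize(text):
    # Lower-cased word counts as a crude bag-of-words "embedding".
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a, b):
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query (cosine similarity)."""
    q = _vectorize(query)
    ranked = sorted(documents, key=lambda d: _cosine(q, _vectorize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query, documents, k=1):
    # Prepend the retrieved context ahead of the user's request.
    context = "\n".join(retrieve(query, documents, k))
    return f"{context}\n<|end|>\n<|user|>{query}"


if __name__ == "__main__":
    docs = ["Sortino ratio measures downside risk adjusted return",
            "Bananas are a yellow fruit",
            "Plotting lines with matplotlib"]
    print(build_rag_prompt("compute the sortino ratio", docs))
```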

Quick Start

VLM Agent (WIP)

VLM's understanding of both text and visuals enables interactive generation and modification of plots/images, opening up new possibilities for GUI development and data visualization.

from phi_3_vision_mlx import chatui

chatui()

Visual Question Answering (VQA)

Drag and drop screenshot images from the clipboard into the chatui textbox, or upload image files, for VQA.

Or,

from phi_3_vision_mlx import chat

chat('What is shown in this image?', 'https://assets-c4akfrf5b4d3f4b7.z01.azurefd.net/assets/2024/04/BMDataViz_661fb89f3845e.png')

The image displays a bar chart with percentages on the vertical axis ranging from 0% to 100%, and various statements on the horizontal axis. Each bar represents the percentage of respondents who agree with the corresponding statement. The statements include 'Having clear goals for a meeting', 'Knowing where to find information', 'Having more focus on summarization', 'Understand information I need', 'Having tools to prepare for meetings', and 'Having clear

Prompt: 377.97 tokens-per-sec (3103 tokens / 8.2 sec)

Generation: 8.04 tokens-per-sec (100 tokens / 12.3 sec)

Batched Generation

The generate function handles the padding of each input prompt, along with the corresponding attention masks and position IDs, so that batched prompts are processed correctly.

chat([

"Write an executive summary for a communications business plan",

"Write a resume.",

"Write a mystery horror.",

"Write a Neurology ICU Admission Note.",])

< Generated text for prompt #0 >

Title: Communications Business Plan

Executive Summary:

Our communications business plan aims to establish a leading provider of communication solutions for businesses and individuals. We will focus on delivering high-quality, reliable, and cost-effective communication services, including voice, video, and data services. Our services will be tailored to meet the unique needs of our customers, and we will offer a range of packages and plans to suit different budgets and requirements.

< Generated text for prompt #1 >

Title: [Your Name]

Contact Information:

Email: [Your Email]

Phone: [Your Phone]

Objective:

To obtain a position as a [Your Desired Position] in [Your Industry/Company] that utilizes my skills and experience to contribute to the success of the organization.

Education:

[Your Name]

[Your Degree]

[Your Major]

[Your University]

[Year

< Generated text for prompt #2 >

Title: The Haunting of Hillcrest Manor

In the small, sleepy town of Crestwood, nestled at the edge of a dense forest, stood an imposing manor known as Hillcrest Manor. The manor had been abandoned for decades, its once grand facade now crumbling and overgrown with ivy. Whispers of its dark past and the mysterious disappearance of its former inhabitants had become the stuff of local

< Generated text for prompt #3 >

Neurology ICU Admission Note

Patient: John Doe

Date: [Insert Date]

Time: [Insert Time]

Chief Complaint: Severe headache, nausea, and vomiting

History of Present Illness: The patient presented to the emergency department with a severe headache, nausea, and vomiting. The headache was described as a constant, throbbing pain that was worse

Prompt: 134.22 tokens-per-sec (80 tokens / 0.6 sec)

Generation: 30.74 tokens-per-sec (400 tokens / 13.0 sec)
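The padding scheme described above can be illustrated on plain token-id lists. This sketch assumes left padding and a pad id of 0, both illustrative choices: left padding keeps every prompt's last real token in the final column, where the next token is predicted, the mask hides the padded slots, and position ids restart at 0 on each prompt's first real token. left_pad_batch is hypothetical, and the project's generate function may differ in these details.

```python
def left_pad_batch(batch, pad_id=0):
    """Left-pad token-id sequences to a common length.

    Returns (input_ids, attention_mask, position_ids) as nested lists.
    Mask entries are 1 for real tokens and 0 for padding.
    """
    width = max(len(seq) for seq in batch)
    input_ids, attention_mask, position_ids = [], [], []
    for seq in batch:
        pad = width - len(seq)
        input_ids.append([pad_id] * pad + list(seq))
        attention_mask.append([0] * pad + [1] * len(seq))
        # Padded slots get position 0; real tokens count up from 0.
        position_ids.append([0] * pad + list(range(len(seq))))
    return input_ids, attention_mask, position_ids


if __name__ == "__main__":
    for row in left_pad_batch([[5, 6, 7], [8]]):
        print(row)
```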

Cache Quantization

chat("Write a cosmic horror.", quantize_cache=True)

Title: The Echoes of the Void

In the depths of the cosmic abyss, where the stars are but distant memories and the black hole's pull is a relentless force, there exists a realm of unimaginable horror. This is the realm of The Echoes of the Void, a place where the very fabric of reality is distorted and the line between the living and the dead is blurred.

The Echo

Prompt: 45.88 tokens-per-sec (14 tokens / 0.3 sec)

Generation: 6.82 tokens-per-sec (100 tokens / 14.5 sec)
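KV cache quantization stores the attention keys and values in fewer bits to shrink the cache for long contexts. A widely used scheme, and the form MLX's mx.quantize takes, is group-wise affine quantization: each small group of values gets its own scale and offset. The pure-Python toy below shows an 8-bit round trip; the group size and bit width are illustrative, all names are hypothetical, and whether the cache here uses these settings is not stated in the notes.

```python
def quantize_group(values, bits=8):
    """Affine-quantize one group of floats to unsigned ints in [0, 2**bits - 1]."""
    levels = (1 << bits) - 1
    lo, hi = min(values), max(values)
    scale = (hi - lo) / levels or 1.0  # avoid a zero scale for constant groups
    q = [round((v - lo) / scale) for v in values]
    return q, scale, lo


def dequantize_group(q, scale, lo):
    """Map quantized ints back to approximate floats."""
    return [lo + x * scale for x in q]


def quantize(values, group_size=4, bits=8):
    """Quantize in fixed-size groups so each group gets its own range."""
    return [quantize_group(values[i:i + group_size], bits)
            for i in range(0, len(values), group_size)]


if __name__ == "__main__":
    values = [0.1, -0.4, 0.25, 0.9, 3.0, 2.5, -1.0, 0.0]
    for q, scale, lo in quantize(values):
        print(q, [round(x, 3) for x in dequantize_group(q, scale, lo)])
```

Each reconstructed value is off by at most half of its group's scale, which is why smaller groups (tighter ranges) give lower error at the cost of storing more scales and offsets.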

Model Quantization

chat("Write a cosmic horror.", quantize_model=True)

Title: The Eye of the Void

The night was dark and cold, and the stars shone brightly in the sky above. The wind howled through the trees, carrying with it the scent of death and decay.

In the heart of the forest, a lone figure stood, staring into the abyss. His name was John, and he had been drawn to this place by a mysterious force that he could not explain.

As he stood there

Prompt: 149.99 tokens-per-sec (14 tokens / 0.1 sec)

Generation: 53.36 tokens-per-sec (100 tokens / 1.9 sec)
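The size figures in the feature list (2.3GB quantized vs 8.5GB full precision) can be sanity-checked with back-of-envelope arithmetic. The script below assumes roughly 4.2 billion parameters, 16-bit weights before quantization, and afterwards 4-bit weights with a 16-bit scale and bias per group of 64 (MLX's default quantization settings). None of these values is stated in the notes.

```python
def weight_gb(n_params, bits_per_weight):
    """Weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 4.2e9  # approximate parameter count (assumption)

full = weight_gb(N_PARAMS, 16)  # 16-bit (fp16/bf16) weights
# 4-bit weights, plus a 16-bit scale and a 16-bit bias shared by each group
# of 64 weights: 32 / 64 = 0.5 extra bits per weight.
quant = weight_gb(N_PARAMS, 4 + 32 / 64)

print(f"16-bit: {full:.2f} GB, 4-bit: {quant:.2f} GB")
```

Under these assumptions the estimates come out at about 8.4GB and 2.4GB, close to the quoted figures.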

LoRA Training

from phi_3_vision_mlx import train_lora

train_lora(lora_layers=5, lora_rank=16, epochs=10, lr=1e-4, warmup=.5, mask_ratios=[.0], adapter_path='adapters', dataset_path="JosefAlbers/akemiH_MedQA_Reason")

LoRA Inference

chat("Write a cosmic horror.", adapter_path='adapters')

Title: The Echoes of the Void

In the depths of the cosmic abyss, where the stars are but distant memories and the black hole's pull is a relentless force, there exists a realm of unimaginable horror. This is the realm of The Echoes of the Void, a place where the very fabric of reality is distorted and the line between life and death is blurred.

The Echoes of

Prompt: 36.87 tokens-per-sec (14 tokens / 0.4 sec)

Generation: 8.56 tokens-per-sec (100 tokens / 11.6 sec)
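LoRA keeps the pretrained weight W frozen and learns a low-rank update B A, so each adapted d_out x d_in matrix adds only r x (d_in + d_out) trainable parameters, where r corresponds to lora_rank. The list-based sketch below shows the forward pass and that count. The alpha / r scaling follows the original LoRA formulation; whether train_lora scales the update the same way is an assumption, and lora_forward and lora_param_count are hypothetical names.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha):
    """y = W x + (alpha / r) * B (A x), with A: r x d_in and B: d_out x r."""
    r = len(A)
    base = matvec(W, x)               # frozen pretrained path
    update = matvec(B, matvec(A, x))  # trainable low-rank path
    return [b + (alpha / r) * u for b, u in zip(base, update)]


def lora_param_count(d_in, d_out, r):
    """Trainable parameters added by one rank-r adapter on a d_out x d_in weight."""
    return r * (d_in + d_out)


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]
    A = [[1.0, 1.0]]        # rank 1
    B = [[0.0], [0.0]]      # zero-initialized, so the adapter starts as a no-op
    print(lora_forward(W, A, B, [2.0, 3.0], alpha=1.0))
    print(lora_param_count(3072, 3072, 16))
```

With B initialized to zero, the adapted layer initially matches the base layer exactly. For a 3072 x 3072 projection (3072 is Phi-3-Mini's hidden size), rank 16 adds 98,304 trainable parameters versus about 9.4 million in the frozen matrix.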

LoRA Testing (WIP)

from phi_3_vision_mlx import test_lora

test_lora(dataset_path="JosefAlbers/akemiH_MedQA_Reason")

Question: A 23-year-old pregnant woman at 22 weeks gestation presents with burning upon urination. She states it started 1 day ago and has been worsening despite drinking more water and taking cranberry extract. She otherwise feels well and is followed by a doctor for her pregnancy. Her temperature is 97.7°F (36.5°C), blood pressure is 122/77 mmHg, pulse is 80/min, respirations are 19/min, and oxygen saturation is 98% on room air. Physical exam is notable for an absence of costovertebral angle tenderness and a gravid uterus. Which of the following is the best treatment for this patient?
- Taught: Nitrofurantoin is the best treatment for a pregnant patient with a likely urinary tract infection, due to its efficacy and safety profile during pregnancy.
- Recall: Nitrofurantoin is the best treatment for a pregnant patient with a likely urinary tract infection, due to its efficacy
- Answer: E
- Attenmpt: E
- Correct: True

Question: A 3-month-old baby died suddenly at night while asleep. His mother noticed that he had died only after she awoke in the morning. No cause of death was determined based on the autopsy. Which of the following precautions could have prevented the death of the baby?
- Taught: Placing infants in a supine position on a firm mattress during sleep is recommended to reduce the risk of sudden infant death syndrome (SIDS).
- Recall: Placing infants in a supine position on a firm mattress during sleep is recommended to reduce the risk of sudden infant death syndrome (
- Answer: A
- Attenmpt: A
- Correct: True

Question: A mother brings her 3-week-old infant to the pediatrician's office because she is concerned about his feeding habits. He was born without complica...
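Each entry in the log pairs the dataset's Answer with the model's attempt and marks it Correct when they match; a summary score such as the 0.6 (6/10) reported elsewhere in these notes is then just the fraction correct. A minimal version of that bookkeeping is sketched below; score is a hypothetical helper, not the library's function.

```python
def score(results):
    """Fraction of (answer, attempt) pairs where the attempt matches the answer."""
    if not results:
        return 0.0
    correct = sum(1 for answer, attempt in results if answer == attempt)
    return correct / len(results)


if __name__ == "__main__":
    # The two completed entries in the log above: (Answer, Attempt).
    print(score([("E", "E"), ("A", "A")]))
```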

Phi-3-Vision VLM Agent

Phi-3-Vision VLM Model for Apple MLX: An All-in-One Port

This project brings the phi-3-vision VLM to Apple's MLX framework, offering a comprehensive solution for various text and image processing tasks. With a focus on simplicity and efficiency, it provides a straightforward, minimal integration of the model. It covers generating quantized model weights, applying KV cache quantization during inference, LoRA/QLoRA training, and model benchmarking, all contained in a single file for convenient access and usage.

Key Features

- Batch Generation: Accelerate inference by generating text for multiple prompts concurrently (107 tokens-per-sec batched vs 56 tokens-per-sec original).

- Model Quantization: Reduce model size for faster loading and deployment (2.3GB quantized vs 8.5GB original full-precision).

- Cache Quantization: Optimize inference for processing long contexts with key-value cache quantization.

- Chat Template: Use a chat template to streamline interactions with the model.

- LoRA Training: Easily customize the model for specific tasks or datasets using LoRA.

- Benchmarking: Quickly assess model performance on any dataset (WIP).

- Su-scaled RoPE: Implements Su-scaled Rotary Position Embeddings to manage sequences of up to 128K tokens.

- VLM Agent: Leverages the VLM's visual understanding for interactive code generation and refinement, enabling data visualization and image manipulation through a visual feedback loop (WIP).

- Long Context RAG: Enables the integration of Retrieval-Augmented Generation to harness large amounts of external knowledge for complex tasks such as code understanding, leveraging the phi-3-vision model's 128K context window. (WIP)
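Long-context RAG is still marked WIP, so the following is only a minimal sketch of the general idea, not this project's implementation: split documents into chunks, rank them against the query, and pack the best ones into a single long prompt. Keyword-overlap scoring stands in for the embedding-based retrieval a real system would typically use, and word counts stand in for token counts; `retrieve`, `build_prompt`, and the default `max_words` budget are hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase alphanumeric words; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def chunk(text, size=200):
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(query, documents, max_words=60_000, chunk_size=200):
    """Rank chunks by query-term overlap, then greedily pack them into a word budget."""
    query_counts = Counter(tokenize(query))

    def score(text):
        counts = Counter(tokenize(text))
        return sum(min(counts[t], n) for t, n in query_counts.items())

    chunks = [c for doc in documents for c in chunk(doc, chunk_size)]
    ranked = sorted(((score(c), c) for c in chunks), key=lambda p: p[0], reverse=True)
    selected, used = [], 0
    for s, c in ranked:
        n_words = len(c.split())
        if s == 0 or used + n_words > max_words:
            continue  # skip irrelevant chunks and chunks that would overflow the budget
        selected.append(c)
        used += n_words
    return selected


def build_prompt(query, documents, **kwargs):
    """Place the retrieved context ahead of the question in one long prompt."""
    context = "\n\n".join(retrieve(query, documents, **kwargs))
    return f"{context}\n\nQuestion: {query}"
```

A larger context window simply allows a larger budget, so more retrieved material fits into one prompt.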

Quick Start

VLM Agent (WIP)

The VLM's understanding of both text and visuals enables interactive generation and modification of plots and images, opening up new possibilities for GUI development and data visualization.
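The visual feedback loop behind the agent can be sketched as follows. This is an assumption about its general shape, not the code behind `chatui()`: the model writes plotting code, the code is rendered to an image, and the image is fed back so the model can judge and refine its own output. `visual_feedback_loop` and the three callables it takes are hypothetical placeholders for a VLM call, a sandboxed renderer, and an acceptance check.

```python
from typing import Callable, Optional


def visual_feedback_loop(
    task: str,
    generate_code: Callable[[str, Optional[bytes]], str],
    render: Callable[[str], bytes],
    is_done: Callable[[str, bytes], bool],
    max_rounds: int = 3,
) -> str:
    """Generate code, render it, and feed the rendered image back until accepted."""
    image: Optional[bytes] = None
    code = ""
    for _ in range(max_rounds):
        code = generate_code(task, image)  # the model sees the task plus the last render
        image = render(code)               # e.g. run plotting code and capture PNG bytes
        if is_done(code, image):           # e.g. ask the VLM whether the image matches the task
            break
    return code
```

Capping the number of rounds keeps a model that never accepts its own output from looping forever.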

from phi_3_vision_mlx import chatui

chatui()

Image Captioning

from phi_3_vision_mlx import chat

chat('What is shown in this image?', 'https://assets-c4akfrf5b4d3f4b7.z01.azurefd.net/assets/2024/04/BMDataViz_661fb89f3845e.png')

The image displays a bar chart with percentages on the vertical axis ranging from 0% to 100%, and various statements on the horizontal axis. Each bar represents the percentage of respondents who agree with the corresponding statement. The statements include 'Having clear goals for a meeting', 'Knowing where to find information', 'Having more focus on summarization', 'Understand information I need', 'Having tools to prepare for meetings', and 'Having clear

Prompt: 377.97 tokens-per-sec (3103 tokens / 8.2 sec)

Generation: 8.04 tokens-per-sec (100 tokens / 12.3 sec)
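Under the hood, a chat template wraps each turn in the special tokens Phi-3 expects. The sketch below reproduces the single-turn, single-image format of the raw prompt string used in the earlier release's Usage example (`<|user|>`, `<|image_1|>`, `<|end|>`, `<|assistant|>`); `format_phi3_prompt` is a hypothetical helper, and the multi-turn layout for `history` is an assumption modeled on the same tokens.

```python
def format_phi3_prompt(user_text, num_images=0, history=()):
    """Build a Phi-3-Vision style prompt string (hypothetical helper)."""
    parts = []
    # Assumed multi-turn layout: earlier turns reuse the same role and <|end|> tokens.
    for user_turn, assistant_turn in history:
        parts.append(f"<|user|>\n{user_turn}<|end|>\n<|assistant|>\n{assistant_turn}<|end|>\n")
    # One numbered placeholder per image, each on its own line before the text.
    image_tags = "".join(f"<|image_{i}|>\n" for i in range(1, num_images + 1))
    # The trailing <|assistant|> tag cues the model to answer.
    parts.append(f"<|user|>\n{image_tags}{user_text}<|end|>\n<|assistant|>\n")
    return "".join(parts)
```

For example, `format_phi3_prompt("What is shown in this image?", num_images=1)` yields exactly the prompt string from that Usage example.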

Batched Generation

The generate function handles the padding of each input prompt, along with the corresponding attention masks and position IDs, so that the model behaves correctly on batches of prompts with different lengths.

chat([

"Write an executive summary for a communications business plan",

"Write a resume.",

"Write a mystery horror.",

"Write a Neurology ICU Admission Note.",])

< Generated text for prompt #0 >

Title: Communications Business Plan

Executive Summary:

Our communications business plan aims to establish a leading provider of communication solutions for businesses and individuals. We will focus on delivering high-quality, reliable, and cost-effective communication services, including voice, video, and data services. Our services will be tailored to meet the unique needs of our customers, and we will offer a range of packages and plans to suit different budgets and requirements.

< Generated text for prompt #1 >

Title: [Your Name]

Contact Information:

Email: [Your Email]

Phone: [Your Phone]

Objective:

To obtain a position as a [Your Desired Position] in [Your Industry/Company] that utilizes my skills and experience to contribute to the success of the organization.

Education:

[Your Name]

[Your Degree]

[Your Major]

[Your University]

[Year

< Generated text for prompt #2 >

Title: The Haunting of Hillcrest Manor

In the small, sleepy town of Crestwood, nestled at the edge of a dense forest, stood an imposing manor known as Hillcrest Manor. The manor had been abandoned for decades, its once grand facade now crumbling and overgrown with ivy. Whispers of its dark past and the mysterious disappearance of its former inhabitants had become the stuff of local

< Generated text for prompt #3 >

Neurology ICU Admission Note

Patient: John Doe

Date: [Insert Date]

Time: [Insert Time]

Chief Complaint: Severe headache, nausea, and vomiting

History of Present Illness: The patient presented to the emergency department with a severe headache, nausea, and vomiting. The headache was described as a constant, throbbing pain that was worse

Prompt: 134.22 tokens-per-sec (80 tokens / 0.6 sec)

Generation: 30.74 tokens-per-sec (400 tokens / 13.0 sec)
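To illustrate what handling padding, attention masks, and position IDs involves, here is a minimal sketch in plain Python. It assumes left padding (a common choice for decoder-only generation, since every prompt then ends at the same column and new tokens can be appended in lockstep); the library's actual padding side, pad token, and array types may differ, and `left_pad_batch` is a hypothetical name.

```python
def left_pad_batch(token_lists, pad_id=0):
    """Left-pad token sequences and build matching attention masks and position IDs."""
    max_len = max(len(tokens) for tokens in token_lists)
    input_ids, attention_mask, position_ids = [], [], []
    for tokens in token_lists:
        n_pad = max_len - len(tokens)
        input_ids.append([pad_id] * n_pad + list(tokens))
        # 0 marks padding that attention must ignore, 1 marks real tokens.
        attention_mask.append([0] * n_pad + [1] * len(tokens))
        # Real tokens count from 0, so padding does not shift their positions;
        # the value at padded slots is arbitrary (0 here) because they are masked out.
        position_ids.append([0] * n_pad + list(range(len(tokens))))
    return input_ids, attention_mask, position_ids
```

For prompts `[5, 6, 7]` and `[8]`, decoding would continue at position 3 for the first row and position 1 for the second, so each sequence keeps the positions it would have had on its own.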

Cache Quantization

chat("Write a cosmic horror.", quantize_cache=True)

Title: The Echoes of the Void

In the depths of the cosmic abyss, where the stars are but distant memories and the black hole's pull is a relentless force, there exists a realm of unimaginable horror. This is the realm of The Echoes of the Void, a place where the very fabric of reality is distorted and the line between the living and the dead is blurred.

The Echo

Prompt: 45.88 tokens-per-sec (14 tokens / 0.3 sec)

Generation: 6.82 tokens-per-sec (100 tokens / 14.5 sec)
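KV cache quantization stores the keys and values of already-processed tokens at lower precision and dequantizes them when attention reads them back, trading a little accuracy and compute for memory on long contexts. The class below is a minimal sketch using symmetric 8-bit quantization with one scale per vector; the bit width, grouping, and the `QuantizedKVCache` name are assumptions, not this library's scheme.

```python
class QuantizedKVCache:
    """Store key/value vectors as signed integer codes with one scale per vector."""

    def __init__(self, bits=8):
        self.qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits (symmetric range)
        self.keys, self.values = [], []  # lists of (integer codes, scale) pairs

    def _quantize(self, vec):
        # Map the largest magnitude to qmax; an all-zero vector falls back to scale 1.0.
        scale = max(abs(x) for x in vec) / self.qmax or 1.0
        return [round(x / scale) for x in vec], scale

    @staticmethod
    def _dequantize(item):
        codes, scale = item
        return [c * scale for c in codes]

    def append(self, key, value):
        """Quantize the new token's key and value as they enter the cache."""
        self.keys.append(self._quantize(key))
        self.values.append(self._quantize(value))

    def get(self):
        """Return dequantized keys and values for the attention computation."""
        return ([self._dequantize(k) for k in self.keys],
                [self._dequantize(v) for v in self.values])
```

If the cache would otherwise hold 16-bit floats, each stored element shrinks to an 8-bit code plus a shared per-vector scale, at the cost of a dequantization step on every read.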

Model Quantization

chat("Write a cosmic horror.", quantize_model=True)

Title: The Eye of the Void

The night was dark and cold, and the stars shone brightly in the sky above. The wind howled through the trees, carrying with it the scent of death and decay.

In the heart of the forest, a lone figure stood, staring into the abyss. His name was John, and he had been drawn to this place by a mysterious force that he could not explain.

As he stood there

Prompt: 149.99 tokens-per-sec (14 tokens / 0.1 sec)

Generation: 53.36 tokens-per-sec (100 tokens / 1.9 sec)
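Model quantization replaces each weight with a low-bit integer code plus a per-group scale and offset. As a minimal sketch, assuming 4-bit affine quantization over groups of 64 weights (similar in spirit to the group-wise scheme of MLX's `mx.quantize`, but not this project's code), the round trip looks like this; `quantize_groups` and `dequantize_groups` are hypothetical helpers.

```python
def quantize_groups(weights, group_size=64, bits=4):
    """Affine per-group quantization: w is approximated by scale * q + bias."""
    levels = 2 ** bits - 1  # codes run from 0 to 15 for 4 bits
    packed = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        lo, hi = min(group), max(group)
        scale = (hi - lo) / levels or 1.0  # constant group: any nonzero scale works
        codes = [round((w - lo) / scale) for w in group]
        packed.append((codes, scale, lo))  # lo doubles as the per-group bias
    return packed


def dequantize_groups(packed):
    """Reconstruct approximate weights from (codes, scale, bias) groups."""
    return [c * scale + bias for codes, scale, bias in packed for c in codes]
```

If the scale and offset are each stored as 16-bit values, every group of 64 weights carries 32 extra bits, about 0.5 bits per weight, so a 4-bit model costs roughly 4.5 bits per weight versus 16 for float16 (about 3.6x smaller). That is in the same range as the 8.5GB to 2.3GB reduction reported above, assuming the original weights are 16-bit.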

LoRA Training

from phi_3_vision_mlx import train_lora

train_lora(lora_layers=5, lora_rank=16, epochs=10, lr=1e-4, warmup=.5, mask_ratios=[.0], adapter_path='adapters', dataset_path="JosefAlbers/akemiH_MedQA_Reason")

LoRA Inference

chat("Write a cosmic horror.", adapter_path='adapters')

Title: The Echoes of the Void

In the depths of the cosmic abyss, where the stars are but distant memories and the black hole's pull is a relentless force, there exists a realm of unimaginable horror. This is the realm of The Echoes of the Void, a place where the very fabric of reality is distorted and the line between life and death is blurred.

The Echoes of

Prompt: 36.87 tokens-per-sec (14 tokens / 0.4 sec)

Generation: 8.56 tokens-per-sec (100 tokens / 11.6 sec)
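LoRA keeps the pretrained weights frozen and learns a low-rank update, so only a small number of parameters are trained. The class below is a minimal sketch of the standard LoRA formulation in plain Python, not this project's implementation: `rank` plays the role of `lora_rank` in the training call above, while `alpha`, the initialization, and the `LoRALinear` name are assumptions. Because `B` starts at zero, the adapted layer initially reproduces the base layer exactly.

```python
import random


class LoRALinear:
    """y = W x + (alpha / rank) * B (A x), with W frozen and only A, B trainable."""

    def __init__(self, weight, rank=16, alpha=32.0, seed=0):
        out_dim, in_dim = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # frozen base weights, out_dim x in_dim
        # A (rank x in_dim) gets a small random init; B (out_dim x rank) starts at zero.
        self.A = [[rng.gauss(0.0, in_dim ** -0.5) for _ in range(in_dim)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(out_dim)]
        self.scale = alpha / rank

    @staticmethod
    def _matvec(matrix, x):
        return [sum(a * b for a, b in zip(row, x)) for row in matrix]

    def __call__(self, x):
        base = self._matvec(self.weight, x)
        delta = self._matvec(self.B, self._matvec(self.A, x))  # low-rank path
        return [b + self.scale * d for b, d in zip(base, delta)]

    def num_trainable(self):
        """Parameters in A and B: rank * (in_dim + out_dim)."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

For a layer with `in_dim` inputs and `out_dim` outputs, the adapter adds only `rank * (in_dim + out_dim)` trainable parameters instead of `in_dim * out_dim`.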

LoRA Testing (WIP)

from phi_3_vision_mlx import test_lora

test_lora(dataset_path="JosefAlbers/akemiH_MedQA_Reason")

Question: A 23-year-old pregnant woman at 22 weeks gestation presents with burning upon urination. She states it started 1 day ago and has been worsening despite drinking more water and taking cranberry extract. She otherwise feels well and is followed by a doctor for her pregnancy. Her temperature is 97.7°F (36.5°C), blood pressure is 122/77 mmHg, pulse is 80/min, respirations are 19/min, and oxygen saturation is 98% on room air. Physical exam is notable for an absence of costovertebral angle tenderness and a gravid uterus. Which of the following is the best treatment for this patient? - Taught: Nitrofurantoin is the best treatment for a pregnant patient with a likely urinary tract infection, due to its efficacy and safety profile during pregnancy. - Recall: Nitrofurantoin is the best treatment for a pregnant patient with a likely urinary tract infection, due to its efficacy - Answer: E - Attenmpt: E - Correct: True Question: A 3-month-old baby died suddenly at night while asleep. His mother noticed that he had died only after she awoke in the morning. No cause of death was determined based on the autopsy. Which of the following precautions could have prevented the death of the baby? - Taught: Placing infants in a supine position on a firm mattress during sleep is recommended to reduce the risk of sudden infant death syndrome (SIDS). - Recall: Placing infants in a supine position on a firm mattress during sleep is recommended to reduce the risk of sudden infant death syndrome ( - Answer: A - Attenmpt: A - Correct: True Question: A mother brings her 3-week-old infant to the pediatrician's office because she is concerned about his feeding habits. He was born without complications and has not had any medical problems up until this time. However, for the past 4 days, he has been fussy, is regurgitating all of his feeds, and his vomit is yellow in color. On physical exam, the child's abdomen is minimally distended but no other abnormalities are appreciat...
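The test output reports, for each question, the reference answer, the model's attempt, and whether they match. As a sketch of turning such a log into an accuracy figure, the hypothetical helper below parses the `Answer: X - Attenmpt: Y` pairs; the field name is matched loosely because the output spells it `Attenmpt`. A real benchmark would more likely compare structured results directly rather than parse text.

```python
import re


def score_records(log_text):
    """Return (correct, total, accuracy) from 'Answer: X - Attenmpt: Y' records."""
    # "Atte\w*" accepts both the "Attenmpt" spelling in the log and "Attempt".
    pairs = re.findall(r"Answer:\s*([A-Z])\s*-\s*Atte\w*:\s*([A-Z])", log_text)
    correct = sum(answer == attempt for answer, attempt in pairs)
    accuracy = correct / len(pairs) if pairs else 0.0
    return correct, len(pairs), accuracy
```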

Phi-3-Vision VLM Model for Apple MLX: An All-in-One Port


This project brings the phi-3-vision VLM to Apple's MLX framework, offering a comprehensive solution for a range of text and image processing tasks. With a focus on simplicity and efficiency, this implementation provides a straightforward, minimal integration of the model. It incorporates essential functionality such as generating quantized model weights, quantizing the KV cache during inference, LoRA/QLoRA training, and model benchmarking, all in a single file for convenient access and usage.

Key Features

- Su-scaled RoPE: Implements Su-scaled Rotary Position Embeddings to manage sequences of up to 128K tokens.

- Model Quantization: Reduce model size for faster loading and deployment (2.3GB quantized vs 8.5GB original).

- KV Cache Quantization: Optimize inference for processing long contexts with minimal overhead (5.3s quantized vs 5.1s original).

- LoRA Training: Easily customize the model for specific tasks or datasets using LoRA.

- Benchmarking: Quickly assess model performance on any dataset (WIP).
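Su-scaled RoPE rescales each rotary frequency by a per-dimension factor, picking a "short" or "long" factor list depending on whether the sequence exceeds the original training length, and multiplies the cos/sin tables by a constant to compensate for the extended range. The sketch below follows the formulation in Hugging Face's Phi-3 implementation as we understand it; `su_scaled_rope` and `rope_cos_sin` are hypothetical helpers, and the defaults (4096 original positions, 131072 maximum) are assumed from the Phi-3 128K configurations, so check the model's config.json before relying on them.

```python
import math


def su_scaled_rope(dim, short_factor, long_factor, seq_len,
                   original_max_pos=4096, max_pos=131072, base=10000.0):
    """Return per-pair inverse frequencies and the cos/sin scaling factor."""
    # Long factors apply only once the sequence exceeds the original training length.
    factors = long_factor if seq_len > original_max_pos else short_factor
    # Standard RoPE frequencies base**(-2i/dim), each divided by its own factor.
    inv_freq = [1.0 / (factors[i] * base ** (2 * i / dim)) for i in range(dim // 2)]
    scale = max_pos / original_max_pos
    attn_factor = 1.0 if scale <= 1.0 else math.sqrt(
        1 + math.log(scale) / math.log(original_max_pos))
    return inv_freq, attn_factor


def rope_cos_sin(position, inv_freq, attn_factor):
    """Scaled cos/sin values used to rotate query/key pairs at one position."""
    angles = [position * f for f in inv_freq]
    return ([math.cos(a) * attn_factor for a in angles],
            [math.sin(a) * attn_factor for a in angles])
```

With those defaults the extension ratio is 32, so the scaling factor is sqrt(1 + ln 32 / ln 4096) = sqrt(17/12), about 1.19.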

Usage

import requests
from PIL import Image

prompt = "<|user|>\n<|image_1|>\nWhat is shown in this image?<|end|>\n<|assistant|>\n"

images = [Image.open(requests.get("https://assets-c4akfrf5b4d3f4b7.z01.azurefd.net/assets/2024/04/BMDataViz_661fb89f3845e.png", stream=True).raw)]

Image Captioning

model, processor = load()

generate(model, processor, prompt, images)

The image displays a bar chart with percentages on the vertical axis ranging from 0% to 100%, and various statements on the horizontal axis. Each bar represents the percentage of respondents who agree with the corresponding statement.<|end|><|endoftext|>

Prompt: 10.689 tokens-per-sec

Generation: 3.703 tokens-per-sec

4.85s user 6.92s system 65% cpu 17.919 total

Cache Quantization