[Serve] Add Cpp Deployment in Ray Serve #34

Force-pushed from a8c21e7 to fcc5d72.

@MingYueQ sorry for the delay on responding to the RFC. We have been putting out fires for Ray 2.6 release & have a company retreat this week. I will have a detailed look next week and get back to you. The main concern is going to be around maintenance burden: the existing Java integrations are written in a way that causes some headaches because we have a lot of branches in the code that are special-cased for Java. If we decide to go ahead with the CPP extension, we’ll need to make sure to improve this across all three languages.

@edoakes Thank you for your reply. We have the following suggestions regarding your concerns:

@edoakes Thank you for your reply. At the time when Java Deployment was supported, there was not a good abstraction for multiple languages. Because there was no expectation of support for other languages, Java was hardcoded in some places. In the development work related to this proposal, I will work with @MingYueQ to improve the abstraction part for multiple languages.

As discussed offline let's re-open discussion after Ray summit when the Serve team has some more bandwidth.

OK. During the period of the Ray Summit, we will also prepare more detailed information, including user guide, architecture design, benchmark, and so on.

@edoakes Please review this topic again.

Some comments inline. High level questions:

- How does this relate to the existing Ray C++ actor support? Will this require any extensions of it?

- Will we support the DeploymentHandle interface in C++? Or will applications be required to have a Python ingress deployment which may call into the C++ deployment? Please add an example of a Python deployment calling into a C++ one (including calling multiple different methods on it).

similarity_service = serve.deployment(_func_or_class='SimilarityService::FactoryCreate', name='similarity_service', language='CPP')

rank_service = serve.deployment(_func_or_class='RankService::FactoryCreate', name='rank_service', language='CPP')

with InputNode() as input:

As discussed on the Java improvement REP, let's please update this to use bind() with DeploymentHandle calls instead of the call graph API (there should be no InputNode).

RecommendService is a sequential invocation of other services without complex processing logic, so we can directly use the DAG ability to connect these services, eliminating the need for RecommendService and simplifying user logic.

Next, we start the Ray Serve runtime and use Python Serve API deploy these Service as Deployment:

```python
feature_service = serve.deployment(_func_or_class='FeatureService::FactoryCreate', name='feature_service', language='CPP')
```

This looks pretty ugly: it's using a private attribute (_func_or_class). Maybe we can introduce a simple wrapper class like serve.CPPDeployment(factory_function)

Could you add some detail about this part to the REP please? What will be the deployment handle interface in C++ and roughly how would a shared core implementation work across the three languages (this part doesn't need to go into too much detail that can be left to implementation)?

Signed-off-by: wangyingjie <[email protected]>

Force-pushed from 5f2fb61 to 5b9417e.

@MingYueQ sorry about the delay, just got back from a long vacation! The proposal is looking good. I just have one more comment for you, see inline.

I will send this out to the Ray committers mailing list for broader feedback on Monday and let's try to get it approved by end of next week.

- Init Ray Serve C++ project structure

- Create C++ Deployment through Python/Java API

- ServeHandler for C++

- Accessing C++ Deployment using Python/Java/C++ ServeHandle or HTTP

From my perspective I think supporting HTTP is a P0 requirement in order to really say that we support C++ as a language in Ray Serve.

Could you at least sketch a possible API that we could support here? It does not have to be part of the initial scope of implementation. But without having this I think the proposal is incomplete.

Yes.

Expose two primary APIs to users: SERVE_FUNC and SERVE_DEPLOYMENT, with a detailed explanation provided in the section titled "Register Function".

Furthermore, users can deploy services through these APIs, as exemplified in the "Converting to a Ray Serve Deployment" section.

I am a bit confused -- how would a C++ deployment be directly exposed via HTTP? Or are you proposing that in order to expose it via HTTP it must be placed behind a Python deployment that calls into it via DeploymentHandle?

In the example above, I see the RecommendService but this takes as arguments a std::string and an int. How are these parsed from an HTTP request? And how can an HTTP response be output?

The way of accessing via HTTP needs to be adjusted for this demo.

curl 'http://127.0.0.1:8000?request=computer&num=1'

This is not sufficient because the type information for num=1 is lost in the HTTP query string: every value arrives as a string. For cases with multiple input parameters, it is possible to transmit them as a JSON string in the HTTP body, for example:

curl -d '{"request": "computer", "num": 1}' http://127.0.0.1:8000

This way, the request flow is: -> HTTPProxy (access with DeploymentHandle) -> Cpp Deployment.

And currently, the HTTPProxy should be able to meet the requirements without any additional modifications.

@MingYueQ can adjust according to this approach.
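To make the type-loss point above concrete, here is a minimal Python sketch using only the standard library. It is not Ray Serve code: `recommend` is a hypothetical stand-in for a deployment method that takes a string and an integer, and the strict type check is an assumption made purely for illustration.

```python
import json
from urllib.parse import parse_qs, urlsplit


def recommend(request: str, num: int) -> list:
    """Hypothetical stand-in for a deployment method taking (string, int)."""
    if not isinstance(request, str) or not isinstance(num, int):
        raise TypeError("expected (str, int)")
    return [f"{request}-{i}" for i in range(num)]


# Query-string form: every value is decoded as a string, so num is "1", not 1.
url = "http://127.0.0.1:8000?request=computer&num=1"
query_args = {key: values[0] for key, values in parse_qs(urlsplit(url).query).items()}

# JSON-body form: the JSON number is decoded as a Python int.
body_args = json.loads('{"request": "computer", "num": 1}')

print(type(query_args["num"]).__name__)  # str
print(type(body_args["num"]).__name__)   # int
print(recommend(**body_args))
```

With the query-string arguments, `recommend(**query_args)` raises `TypeError` unless the server knows the target types and converts the values itself, which is the extra work the JSON body avoids.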

Are there any standard HTTP -> C++ struct parsing libraries that we could lean on here to help define the input/output formatting? For example in Python we use FastAPI to handle all of this. Otherwise this will become a rabbit hole (HTTP input parsing/output formatting could be a whole library in itself).

From our investigation so far, if a C++ Deployment is to map a request path to a user-registered function, it has to implement that routing itself. A more native approach is to implement it with macros. Specifically:

The SERVE_DEPLOYMENT macro described in the REP above originally generates a <method_name, std::function> mapping. Keeping that mapping would require a separate cache of <path, method_name>, so the call chain becomes path -> method_name -> std::function, which is longer. It would therefore be better for SERVE_DEPLOYMENT to generate a <path, std::function> mapping directly. Taking RankService as an example:

    #pragma once

    #include "api/serve_deployment.h"

    namespace ray {
    namespace serve {

    class RankService {
     public:
      static RankService *FactoryCreate() {
        return new RankService();
      }
    };

    // Register function
    SERVE_DEPLOYMENT("/rankService/factoryCreate", RankService::FactoryCreate);

    }  // namespace serve
    }  // namespace ray

In SERVE_DEPLOYMENT, the first parameter is the path and the second parameter is the user function.
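The difference between the two lookup designs discussed in this comment can be sketched in a language-neutral way. The Python below is only an illustration of the data structures, not the proposed C++ macro: `serve_deployment`, the path `/rankService/rank`, the method name `RankService::Rank`, and the `rank` function are all hypothetical.

```python
from typing import Callable, Dict, List


def rank(scores: Dict[str, float]) -> List[str]:
    """Hypothetical user function: order words by descending similarity."""
    return sorted(scores, key=scores.get, reverse=True)


# Design A: path -> method_name -> callable (two lookups per request).
path_to_method: Dict[str, str] = {"/rankService/rank": "RankService::Rank"}
method_to_func: Dict[str, Callable] = {"RankService::Rank": rank}


def dispatch_two_step(path: str, *args):
    return method_to_func[path_to_method[path]](*args)


# Design B: path -> callable directly (one lookup per request).
path_to_func: Dict[str, Callable] = {}


def serve_deployment(path: str, func: Callable) -> None:
    """Illustrative registration helper playing the role of the macro."""
    if path in path_to_func:
        raise ValueError(f"path already registered: {path}")
    path_to_func[path] = func


def dispatch_direct(path: str, *args):
    return path_to_func[path](*args)


serve_deployment("/rankService/rank", rank)

scores = {"laptop": 0.9, "desk": 0.2, "tablet": 0.7}
print(dispatch_two_step("/rankService/rank", scores))
print(dispatch_direct("/rankService/rank", scores))
```

Both dispatchers return the same result; the direct mapping simply removes the intermediate table that would otherwise have to be kept in sync with the method registry.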

Summary

General Motivation

In scenarios such as search, inference, and others, system performance is of utmost importance. Taking the model inference and search engines of Ant Group as an example, these systems require high throughput, low latency, and high concurrency as they handle a massive amount of business. In order to meet these requirements, they have all chosen C++ for designing and developing their systems, ensuring high efficiency and stability. Currently, these systems plan to run on Ray Serve to enhance their distributed capabilities. Therefore, Ray Serve needs to provide C++ deployment so that users can easily deploy their services.

Should this change be within ray or outside?

Main ray project. Changes are made at the Ray Serve level.

Stewardship

Required Reviewers

@sihanwang41

@edoakes

@akshay-anyscale

Shepherd of the Proposal (should be a senior committer)

@sihanwang41

@edoakes

@akshay-anyscale

Design and Architecture

Example Model

Taking a recommendation system as an example: when a user enters a search word, the system returns recommended words that are similar to it.

The RecommendService receives user requests and calls the FeatureService, SimilarityService, and RankService to calculate similar words, then returns the results to the user:

The FeatureService converts requests to vectors:

The SimilarityService is used to calculate similarity:

The RankService is used to sort based on similarity:

This is the code that uses the RecommendService class:

In this way, all services need to be deployed together, which increases the system load and is not conducive to expansion.

Converting to a Ray Serve Deployment

Through Ray Serve, the core computing logic can be deployed as a scalable distributed service.

First, convert these Services to run on Ray Serve.

FeatureService:

SimilarityService:

RankService:

RecommendService is a sequential invocation of other services without complex processing logic, so we can directly use the DAG ability to connect these services, eliminating the need for RecommendService and simplifying user logic.

Next, we start the Ray Serve runtime and use the Python Serve API to deploy these services as Deployments:

Calling Ray Serve Deployment with HTTP

Overall Design

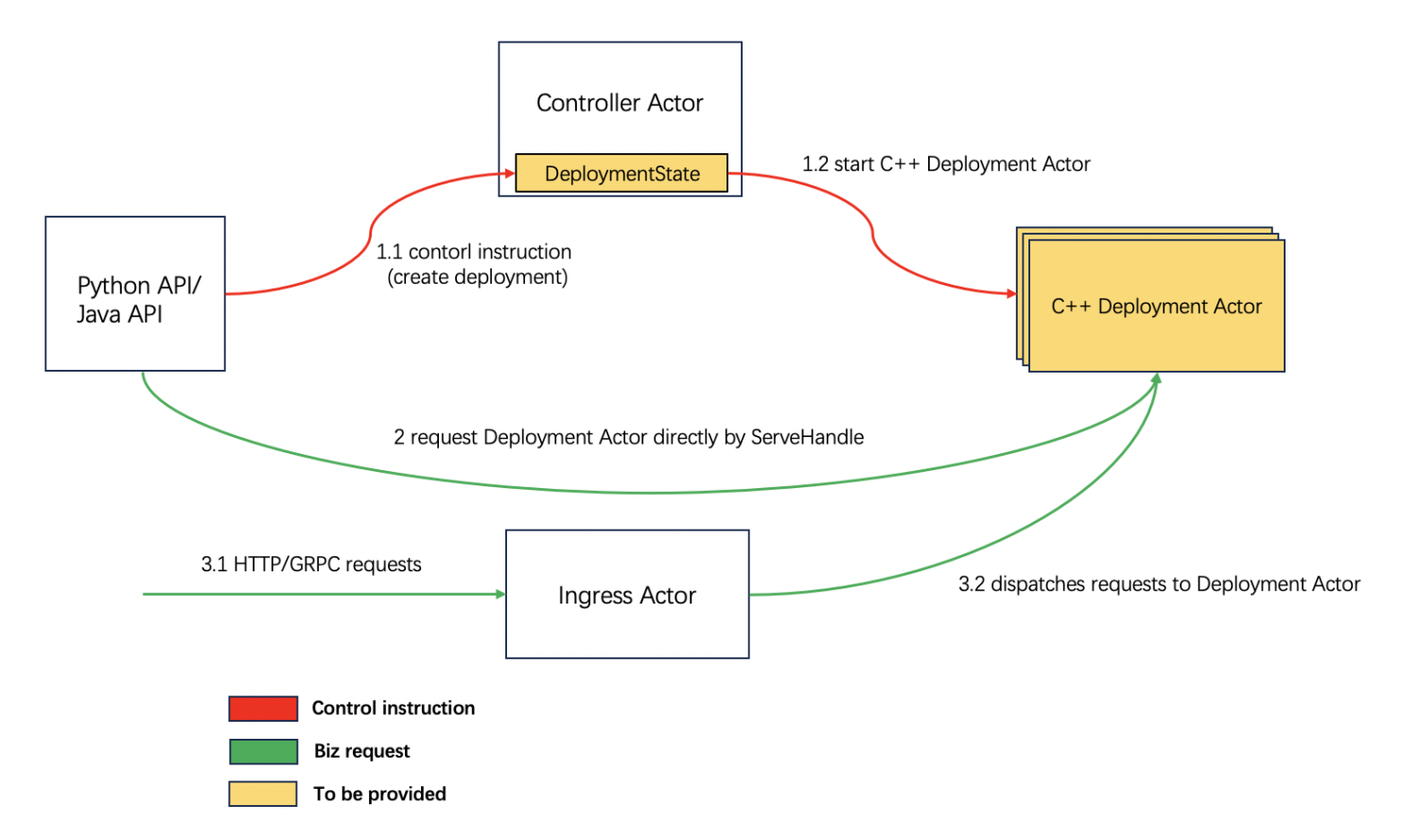

Ray Serve maintains a Controller Actor and an Ingress Actor. These two roles are independent of the language the user chooses, and they can manage cross-language deployments and route requests to them.

C++ Case Deduction

Businesses can send control commands to the Ray Serve Controller Actor, such as creating an ingress or creating a deployment. When a C++ online service is published, the DeploymentState component needs to create C++ Deployment Actors. Users can then invoke their business logic from a Python/Java driver, a Ray task, or a Ray actor, and the requests are dispatched to the C++ Deployment Actors.

Package

C++ programs are typically compiled and packaged into three types of results: binary, static library, shared library.

In conclusion, the business needs to package the system as a shared library to run it on Ray Serve.

Register function

Ray Serve will add SERVE_FUNC and SERVE_DEPLOYMENT macros to publish user Service as Deployment.

SERVE_FUNC: Resolving overloaded function registration;

SERVE_DEPLOYMENT: Publishing user Service as Deployment.

Example:

Compatibility, Deprecation, and Migration Plan

This feature adds C++ deployment to Ray Serve without modifying or deprecating existing functionality. The changes to the Ray Serve API are purely additive.

Test Plan and Acceptance Criteria

(Optional) Follow-on Work